Artificial intelligence is becoming a foundational part of how businesses operate, from automated decision-making to personalized recommendations and predictive analytics. As adoption accelerates, so do the risks. Bias, discrimination, lack of transparency, and regulatory non-compliance are no longer theoretical concerns—they’re live issues that demand structured oversight.

A responsible AI audit is a formal, structured evaluation of an AI system’s behavior, performance, and alignment with ethical and regulatory standards. It acts as a diagnostic and accountability mechanism that helps organizations ensure their AI systems are fair, transparent, safe, and compliant with emerging laws. This process increasingly includes LLM audit procedures, which specifically focus on the risks and performance of large language models in real-world applications.

For organizations ready to begin or strengthen their AI governance journey, the Pacific AI Policy Suite provides a clear and actionable foundation. This free resource translates over 80 global and sector-specific AI laws and standards into a unified set of policies. The suite is regularly updated, easy to implement, and designed to be operational, helping teams adopt ethical AI practices and reduce legal exposure from the start. If your team is considering a formal audit or enterprise rollout, you can contact Pacific AI to explore professional audit and compliance services.

Why AI Audits Are Important Today

AI systems increasingly power critical decisions in hiring, lending, insurance, medical diagnoses, and more. But with power comes responsibility. The more deeply these systems influence people’s lives, the greater the need for independent evaluation through a responsible AI audit.

AI governance audits help close the accountability gap. They uncover hidden biases that may disadvantage certain groups. They evaluate transparency and explainability, ensuring decisions can be understood by users and regulators. They help assess compliance with regulations like the EU AI Act or HIPAA, and ensure proper governance is in place to mitigate risk.

As generative AI grows in adoption, an LLM audit becomes especially important for organizations deploying large language models to ensure their outputs are consistent, safe, and legally defensible. Without audits, organizations risk not only ethical failures, but also reputational harm, legal action, and regulatory penalties. In a world where AI decisions increasingly impact real people, trust depends on validation.

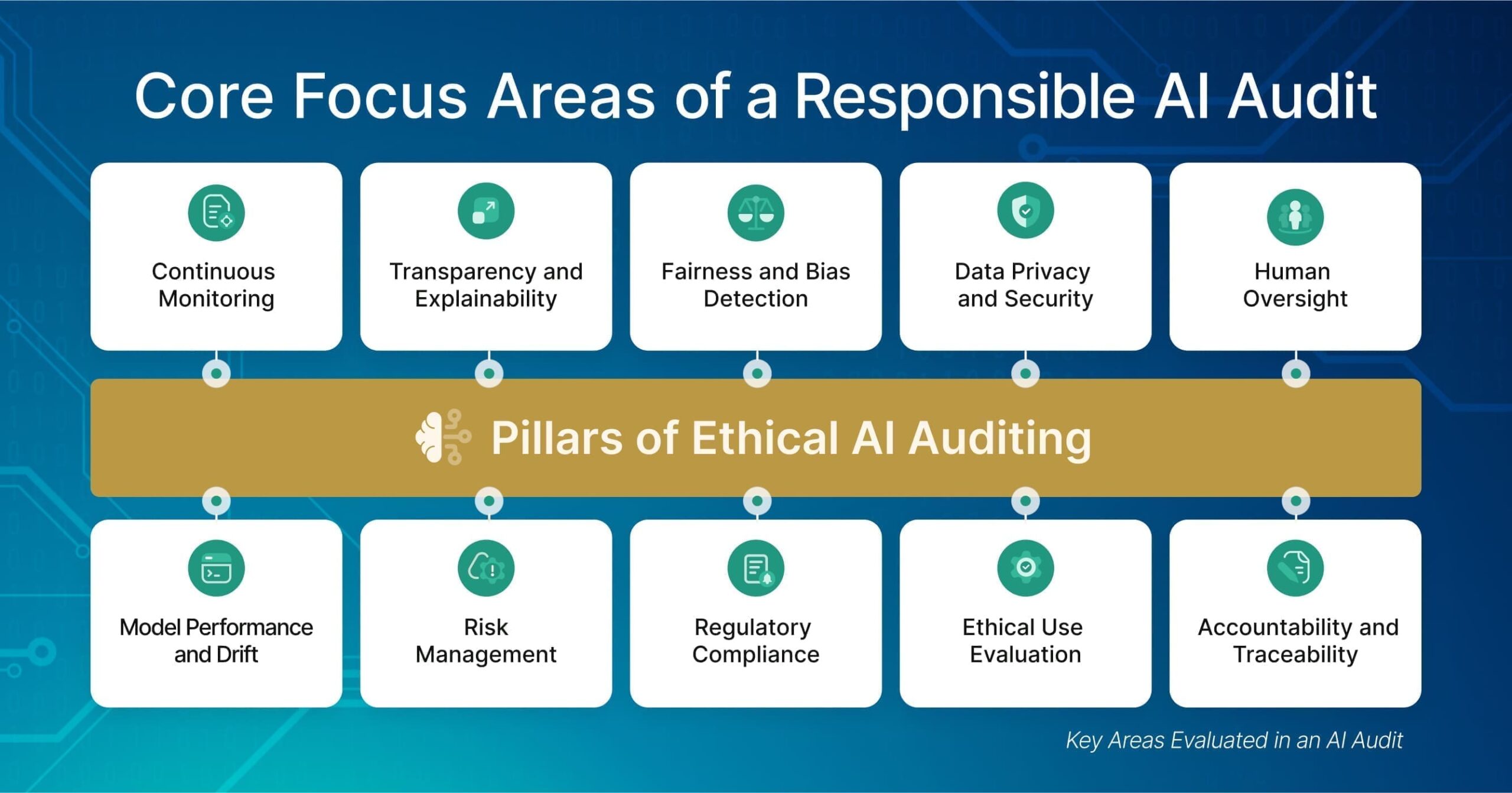

Key Areas Evaluated in an AI Audit

A comprehensive responsible AI audit looks across the entire lifecycle of an AI system. It begins with the data—how it’s collected, labeled, and used for training. Auditors assess whether the data is representative, unbiased, and secure. They examine the model’s design and performance, probing for algorithmic bias, drift, and reliability under different conditions. In the case of large language models, LLM audit criteria include hallucination frequency, content safety, prompt injection vulnerability, and alignment with intended use.
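To make "drift" concrete, here is a minimal sketch of the Population Stability Index (PSI), one common way to compare the distribution of a model input or score at training time with what the model sees in production. The function name, the equal-width binning, and the small floor for empty bins are assumptions made for this illustration. The often-quoted cut-offs (below 0.1 roughly stable, above 0.25 a significant shift) are industry rules of thumb, not regulatory thresholds.

```python
import math
from typing import List, Sequence


def psi(expected: Sequence[float], actual: Sequence[float], bins: int = 10) -> float:
    """Population Stability Index between a baseline sample and a new sample.

    Illustrative sketch: uses equal-width bins over the combined range of both samples.
    """
    lo = min(min(expected), min(actual))
    hi = max(max(expected), max(actual))
    width = (hi - lo) / bins or 1.0  # avoid division by zero if all values are identical

    def shares(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            # Clamp so the maximum value falls into the last bin.
            idx = min(int((v - lo) / width), bins - 1)
            counts[idx] += 1
        # Assumed small floor so empty bins do not produce log(0).
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    # PSI = sum over bins of (actual share - expected share) * ln(actual / expected)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


if __name__ == "__main__":
    baseline = [i / 100 for i in range(100)]          # scores seen during training
    production = [0.5 + i / 200 for i in range(100)]  # scores that shifted upward
    print(round(psi(baseline, baseline), 4))   # identical samples give 0.0
    print(psi(baseline, production) > 0.25)    # large shift is flagged
```

In an audit, a check like this would typically run per feature and per model score on a schedule, with any breach of the agreed threshold recorded and escalated for human review.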

Transparency is another core focus. Auditors review whether the system’s logic can be explained to users or regulators, especially in high-risk domains. They also test for fairness and alignment with ethical principles, and evaluate whether human oversight mechanisms are in place to intervene when something goes wrong.

Finally, the audit examines compliance: Does the AI system align with sector-specific rules, such as those governing healthcare data, financial decision-making, or public sector transparency? And are there processes in place for continuous monitoring and improvement?

What role do AI governance audits play in ensuring ethical AI deployment?

Ethical deployment of AI requires more than good intentions; it requires mechanisms for verification. AI audits test fairness by measuring how systems perform across different demographic groups. They evaluate how transparent and explainable systems really are, and they highlight where accountability may be lacking.
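One simple way to test how a system treats different demographic groups is to compare selection rates, for example loan approvals or interview invitations per group. The sketch below computes each group's rate relative to the most-favored group and flags groups that fall below 0.8, the "four-fifths" heuristic from U.S. employment-selection guidance. The function names and the input format of (group, outcome) pairs are assumptions for this example; a real audit would combine several fairness metrics and statistical significance tests rather than rely on one ratio.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def selection_rates(records: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Share of positive outcomes (e.g. approvals) per group."""
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        positives[group] += int(selected)
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact(
    records: Iterable[Tuple[str, bool]], threshold: float = 0.8
) -> Tuple[Dict[str, float], List[str]]:
    """Each group's selection rate divided by the highest group's rate.

    Returns the ratios and the groups whose ratio falls below `threshold`.
    """
    rates = selection_rates(records)
    best = max(rates.values())
    if best == 0:
        # Nobody was selected in any group, so the ratio is undefined; report parity.
        return {g: 1.0 for g in rates}, []
    ratios = {g: r / best for g, r in rates.items()}
    flagged = sorted(g for g, ratio in ratios.items() if ratio < threshold)
    return ratios, flagged


if __name__ == "__main__":
    # Hypothetical outcomes: group A approved 8 of 10, group B approved 5 of 10.
    data = [("A", i < 8) for i in range(10)] + [("B", i < 5) for i in range(10)]
    ratios, flagged = disparate_impact(data)
    print(ratios)   # {'A': 1.0, 'B': 0.625}
    print(flagged)  # ['B']
```

A flagged group is a signal for investigation, not proof of discrimination; auditors would then look at the data, features, and decision context behind the gap.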

By tying ethical principles to measurable standards, audits bring governance into practice. They reduce the risk of harm, improve user trust, and help organizations demonstrate they take ethics seriously.

How do AI audits help mitigate the risks associated with AI implementation?

AI systems can amplify existing biases or introduce new ones. They may fail under edge cases or misinterpret ambiguous inputs. Without oversight, these risks go unchecked. A responsible AI audit acts as an early-warning system, identifying and mitigating issues before they escalate.

Audits also surface structural risks: misuse of sensitive data, lack of informed consent, or gaps in human oversight. They help businesses deploy AI systems more safely, responsibly, and in line with legal and societal expectations.

Who Needs an AI Governance Audit?

Any organization using AI in sensitive, high-impact, or regulated environments stands to benefit. Healthcare providers deploying clinical AI tools must ensure model transparency and patient safety. Financial institutions using AI for credit scoring or fraud detection must guard against bias and discriminatory practices.

Public sector agencies using AI for benefits allocation or predictive policing face unique ethical and legal challenges. And increasingly, enterprises of all kinds are being held to account for how they use AI internally—whether in hiring, marketing, or customer service.

AI audits help these organizations manage risk, ensure fairness, and build trust in the systems they use and the decisions they support. A responsible AI audit strengthens this process by providing independent verification that safeguards are effective and aligned with ethical and regulatory expectations.

AI Audits and Regulatory Compliance

Around the world, regulators are setting stricter standards for how AI systems are governed. The EU AI Act introduces tiered risk classifications, documentation requirements, and post-market surveillance. In the U.S., HIPAA, the FTC Act, and state-level privacy laws create legal obligations around data use and fairness.

The Pacific AI Policy Suite was built to help organizations operationalize these requirements. It translates a growing body of legal and ethical standards—now spanning more than 80 global regulations—into actionable policy templates that can be applied to each AI use case. By aligning audits with this suite, organizations can not only meet compliance standards, but demonstrate proactive governance to customers, partners, and regulators.

What are the main challenges in conducting AI audits?

AI audits face several practical and technical hurdles. Black-box models, such as large language models and deep neural networks, often lack interpretability. This makes it difficult to explain decisions or assess how inputs influence outputs.

Data privacy is another barrier. Auditing a system may require access to sensitive inputs or decision records, which must be handled under strict compliance controls. Meanwhile, evolving standards and inconsistent regulations create uncertainty for global organizations.

Finally, audits require multidisciplinary expertise—from data scientists and legal experts to ethicists and product leaders. Without cross-functional collaboration, it can be difficult to scale audits across departments and geographies.

Explore more in our piece on Generative AI Governance and the complexities of auditing foundation models in clinical settings.

What are the best practices for conducting regular audits of AI systems?

Effective AI governance audits are built on consistent, well-documented processes. Organizations should start by defining clear audit criteria based on risk level, regulatory context, and ethical standards. Audits should be repeatable and adaptive, evolving as models change or use cases expand. A responsible AI audit framework ensures that these processes remain transparent, measurable, and aligned with best practices.

Involving diverse, cross-functional teams strengthens both the rigor and relevance of an audit. Documentation is key: every step, from data review to decision tracing, must be recorded to support traceability and legal defensibility.
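As an illustration of how audit steps can be recorded in a way that supports traceability, the sketch below keeps an append-only log in which each entry stores a SHA-256 hash of its own contents plus the hash of the previous entry. If any earlier entry is edited after the fact, verification fails. The `AuditLog` class and its methods are hypothetical names for this example, not part of any specific audit product; production systems would also persist entries to write-once storage and control who can append.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Any, Dict, List

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


class AuditLog:
    """Hypothetical hash-chained log of audit steps (illustrative sketch)."""

    def __init__(self) -> None:
        self.entries: List[Dict[str, Any]] = []

    def record(self, step: str, details: Dict[str, Any]) -> Dict[str, Any]:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        entry: Dict[str, Any] = {
            "step": step,
            "details": details,
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "prev_hash": prev_hash,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    @staticmethod
    def _digest(entry: Dict[str, Any]) -> str:
        # Hash every field except the hash itself, with stable key ordering.
        payload = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(payload, sort_keys=True).encode("utf-8")).hexdigest()

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry breaks it."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash or entry["hash"] != self._digest(entry):
                return False
            prev_hash = entry["hash"]
        return True


if __name__ == "__main__":
    log = AuditLog()
    log.record("data_review", {"dataset": "train_v1", "reviewer": "data-science"})
    log.record("bias_test", {"metric": "disparate_impact", "result": "pass"})
    print(log.verify())  # True
    log.entries[0]["details"]["dataset"] = "train_v2"  # simulated tampering
    print(log.verify())  # False
```

The design choice here is tamper evidence rather than tamper prevention: the chain does not stop someone from editing a record, but it makes any edit detectable during review.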

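As systems scale, automation becomes essential. Tools like LangTest make it possible to run repeatable tests for robustness, bias, and accuracy, so validation can happen continuously rather than as a one-time check.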

The Future of Responsible AI and Auditing

AI governance is entering a new era. As generative models become more capable and more ubiquitous, traditional audit approaches must evolve to match their complexity. Future audits will rely more heavily on real-time monitoring, explainability tools, and policy frameworks that can adapt quickly to new risks. A responsible AI audit framework will play a central role in this shift, ensuring transparency, accountability, and continuous oversight as AI systems grow more advanced.

Auditing won’t be a one-off event. It will be a continuous, lifecycle-driven practice supported by automation and informed by evolving best practices. Organizations that embrace this mindset now will be better positioned to innovate responsibly.

For more on what this looks like in practice, read our piece on Generative AI Governance in Healthcare.

Responsible AI is not just about technology—it’s about trust. Audits help organizations bridge the gap between innovation and accountability. They provide the checks and balances needed to ensure AI is used ethically, safely, and in line with the values of the communities it serves.

Whether you’re deploying AI in healthcare, finance, or HR, now is the time to act. Download the Pacific AI Policy Suite to get started, or contact us to explore a professional AI governance audit – including LLM audit services – tailored to your organization.

FAQ

What is a Responsible AI audit and why is it important?

A Responsible AI audit systematically evaluates AI systems—covering algorithms, data inputs, decision logic, and outputs—to ensure alignment with ethical, fairness, privacy and compliance standards. It helps identify bias, data misuse, and safety concerns, building trust and regulatory readiness.

What are the key elements evaluated during an AI audit?

Audits typically examine data governance, bias detection, privacy protection, transparency, robustness, accountability, compliance with regulations, and stakeholder oversight. These elements help ensure systems are lawful, ethical, and resilient.

Who should perform Responsible AI audits?

Audits can be conducted internally (by dedicated governance or audit teams) or externally by independent experts. Effective audits require white-box access to code, training data, and system documentation—beyond simple output reviews.

How often should organizations conduct AI audits?

Regular audits are recommended, especially for high-risk systems or when models are updated. Continuous or periodic evaluations ensure emerging risks are caught and AI systems remain aligned with evolving ethical and legal standards.

What are the main benefits of conducting AI audits?

AI audits help build stakeholder trust, support compliance with emerging regulations (such as the EU AI Act and GDPR), improve fairness, detect vulnerabilities, and prevent reputational or legal harm from unethical or biased AI decisions.