US AI regulation matters to the entire AI industry and to its position in global markets, shaping both domestic and international business. Currently, US AI oversight is a fragmented, sector-specific patchwork rather than a comprehensive federal law. The FDA authorizes AI-enabled medical devices, HHS enforces HIPAA, and the FTC targets deceptive AI claims through Operation AI Comply. With 1,247 AI-enabled devices authorized by the FDA and nearly $145 million in HIPAA penalties collected since 2003, organizations face enforcement now, not later. Understanding this multi-agency landscape is critical for compliance and competitive advantage. [1, 4]

Why AI Regulation Matters in the United States

AI systems now make consequential decisions affecting patient diagnoses, loan approvals, hiring outcomes, and insurance coverage. When these systems fail or discriminate, the harm extends beyond individual cases to undermine trust in entire industries.

Bias in AI systems remains a persistent challenge. Researchers continue to document how AI models can reflect and amplify societal biases related to gender, race, disability, and socioeconomic status. “Unveiling Bias in Language Models” shows how these biases manifest across demographic dimensions, creating disparate impacts that can violate civil rights protections.

Enforcement actions signal regulatory priorities. The FTC launched Operation AI Comply in September 2024, announcing five enforcement actions against companies using AI deceptively. HHS has collected nearly $145 million in HIPAA penalties since 2003 across 152 enforcement actions. [1]

Ethical AI practices require more than voluntary commitments. Responsible AI development must be codified into enforceable standards that protect individuals while enabling innovation. The question is not whether to regulate AI, but how to do so effectively across a complex, multi-stakeholder ecosystem.

Current Regulatory Landscape: Fragmented but Evolving

The US AI regulatory landscape is a series of sector-specific rules rather than a unified federal legislative framework, with oversight fragmented across multiple agencies. The FDA regulates medical devices, HHS enforces healthcare privacy rules, the FTC monitors consumer protection, the EEOC addresses employment discrimination, and NIST develops technical standards. Each agency applies existing statutory authorities to AI within its domain.

State Law Fragmentation

Key state requirements create additional compliance obligations:

- Colorado: AI Act (SB 24-205) effective June 30, 2026, mandates risk management for high-risk systems.

- California: CCPA/CPRA requires transparency for automated decision-making.

- Illinois: BIPA regulates biometric data collection and processing.

- New York City: Local Law 144 mandates bias audits for employment AI tools.

Governance Gap and Need for Unified Frameworks

Congress continues to consider comprehensive AI legislation while federal agencies coordinate their approaches. Organizations face overlapping jurisdiction, with no single authority providing holistic AI governance in the US. Companies need an AI governance platform to map systems across jurisdictions and track regulatory changes, because manual compliance tracking becomes untenable as AI deployments scale.

Key Federal Agencies Involved in AI Oversight

Several federal agencies exercise significant authority over AI systems, each bringing unique expertise and enforcement powers:

Food and Drug Administration (FDA)

The FDA uses a risk-based framework to regulate AI-enabled medical devices. Its 2021 AI/ML-Based Software as a Medical Device (SaMD) Action Plan advanced the use of predetermined change control plans, which let algorithms be updated without a new marketing submission as long as the changes stay within limits authorized in advance. [2] More recently, the FDA has moved toward a lifecycle-based regulatory framework, reflected in its January 2025 draft guidance, “Artificial Intelligence-Enabled Device Software Functions: Lifecycle Management and Marketing Submission Recommendations,” which outlines expectations for managing AI-enabled devices throughout their total product lifecycle (TPLC). By May 2025, the FDA had authorized 1,247 AI-enabled devices, reflecting rapid growth over prior years. Adoption is outpacing oversight: 88% of health systems report using AI, but only 18% report mature AI governance. [3, 4]
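The predetermined change control plan idea can be illustrated with a small check that decides whether a proposed algorithm update stays within limits fixed in advance. This is a hypothetical sketch: the `ChangeControlPlan` and `ProposedUpdate` types, the change-type labels, and the sensitivity and specificity floors are illustrative assumptions rather than FDA-defined fields, and a real plan describes its modification and validation protocols in far more detail.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChangeControlPlan:
    """Hypothetical limits agreed in advance for updating a device algorithm."""
    allowed_change_types: frozenset[str]  # kinds of modification the plan covers
    min_sensitivity: float                # performance floors every update must meet
    min_specificity: float


@dataclass(frozen=True)
class ProposedUpdate:
    change_type: str
    sensitivity: float  # assumed to be measured on a locked validation set
    specificity: float


def within_plan(plan: ChangeControlPlan, update: ProposedUpdate) -> list[str]:
    """Return the reasons an update falls outside the plan (empty list = within plan)."""
    problems = []
    if update.change_type not in plan.allowed_change_types:
        problems.append(f"change type '{update.change_type}' not covered by plan")
    if update.sensitivity < plan.min_sensitivity:
        problems.append(f"sensitivity {update.sensitivity:.2f} below {plan.min_sensitivity:.2f}")
    if update.specificity < plan.min_specificity:
        problems.append(f"specificity {update.specificity:.2f} below {plan.min_specificity:.2f}")
    return problems


plan = ChangeControlPlan(
    allowed_change_types=frozenset({"retrain_same_architecture"}),
    min_sensitivity=0.90,
    min_specificity=0.85,
)

ok_update = ProposedUpdate("retrain_same_architecture", sensitivity=0.92, specificity=0.88)
new_arch = ProposedUpdate("new_architecture", sensitivity=0.95, specificity=0.90)

print(within_plan(plan, ok_update))  # []
print(within_plan(plan, new_arch))   # ["change type 'new_architecture' not covered by plan"]
```

Under this simplified reading, any non-empty result means the update falls outside the plan and would need to go through a new marketing submission.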

Federal Trade Commission (FTC)

The FTC enforces consumer protection laws against deceptive AI practices. Operation AI Comply, launched in September 2024, announced five enforcement actions targeting companies that made false AI claims or used AI in unfair or deceptive ways. The FTC focuses on “AI washing” (misleading marketing about AI capabilities) and on algorithmic discrimination that violates consumer protection standards. [5]

Department of Health and Human Services (HHS)

HHS ensures AI systems comply with HIPAA privacy and security rules. Since April 2003, HHS has received 374,321 HIPAA complaints, resolved 31,191 cases with corrective actions, and collected $144,878,972 in penalties across 152 enforcement actions. [6] The Office for Civil Rights has clarified that AI vendors processing protected health information typically qualify as business associates, triggering HIPAA compliance obligations. [7]

Equal Employment Opportunity Commission (EEOC)

The EEOC prevents discriminatory AI use in hiring and employment decisions. The agency enforces Title VII of the Civil Rights Act, the Americans with Disabilities Act, and other civil rights laws when AI tools produce discriminatory outcomes. EEOC technical assistance has emphasized that employers can remain liable for discriminatory outcomes produced by AI tools they obtain from vendors. [8]

National Institute of Standards and Technology (NIST)

NIST develops voluntary AI standards and guidance that influence regulatory approaches across agencies. Its AI Risk Management Framework (AI RMF) provides structured guidance for identifying, assessing, and mitigating AI risks. Although voluntary, NIST standards often become de facto requirements as agencies and lawmakers reference them. [9]

Department of Justice (DOJ)

The DOJ enforces civil rights laws when AI systems discriminate in areas like housing, lending, and public accommodations. The department has signaled increased focus on algorithmic discrimination and is developing guidance jointly with other agencies.

The DOJ maintains an AI and Civil Rights resource page with guidance documents, enforcement actions, and coordination initiatives. [10]

Major Legislative Proposals and Executive Orders

Federal policymakers have pursued AI governance through both executive action and proposed legislation, though comprehensive federal AI law remains elusive.

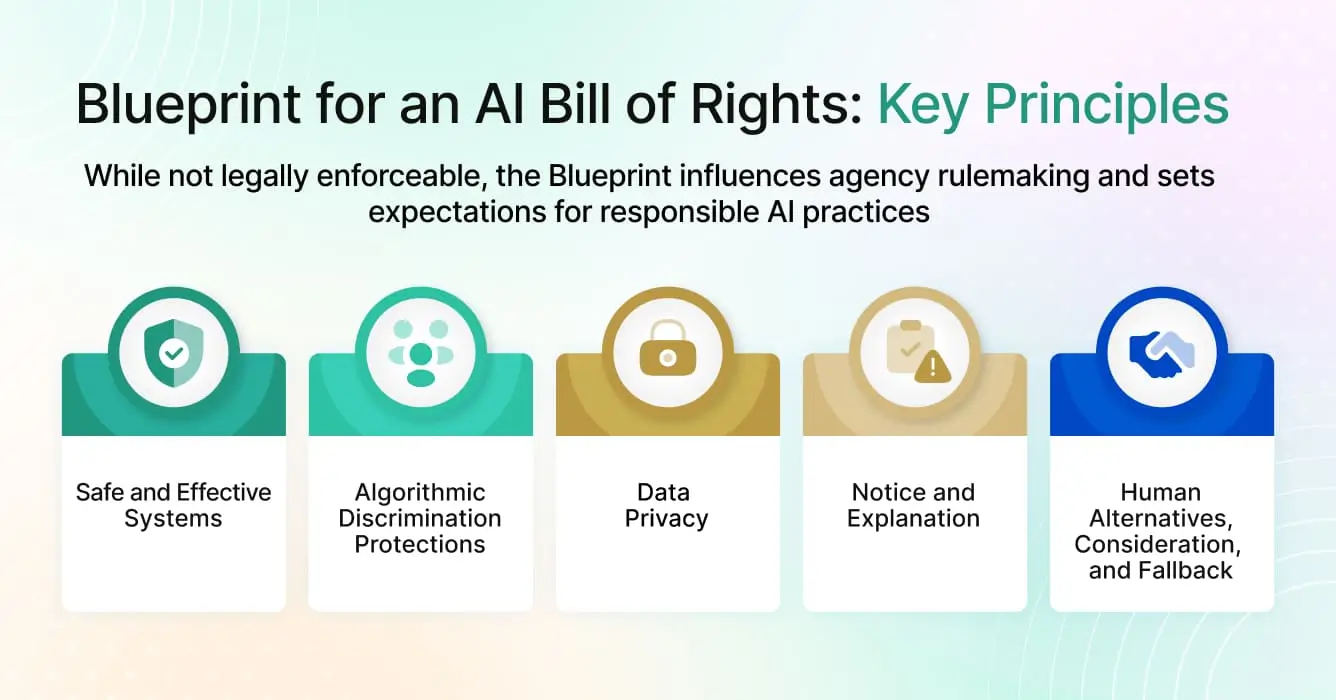

AI Bill of Rights Blueprint

The White House Office of Science and Technology Policy released the Blueprint for an AI Bill of Rights in October 2022. This non-binding framework identifies five principles:

- Safe and effective systems through testing and monitoring

- Algorithmic discrimination protections

- Data privacy safeguards

- Notice and explanation when AI affects decisions

- Human alternatives and fallback options

While not legally enforceable, the Blueprint influences agency rulemaking and sets expectations for responsible AI practices.

Executive Order 14110

President Biden issued Executive Order 14110, “Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence,” on October 30, 2023. The order directed federal agencies to develop AI safety standards, protect privacy, advance equity, and promote innovation. It required developers of the most powerful AI models to report training activities and safety test results to the federal government, and it called for new guidelines on federal AI procurement.

However, President Trump revoked the order on January 20, 2025, demonstrating how quickly AI policy can shift between administrations.

Algorithmic Accountability Act of 2025

This proposed bill would require companies to conduct impact assessments of high-risk automated decision systems, covering performance, bias, discrimination, privacy, and security risks. Versions of the bill have been introduced in several sessions of Congress, but none has passed. [11]

National AI strategy and US AI legislation continue to evolve. Rather than waiting for federal mandates, organizations are already building testing programs for generative AI capabilities. Proactive testing identifies bias, validates performance, and documents the compliance efforts that regulators increasingly expect.

Sector-Specific AI Regulations (Healthcare, Finance, Employment)

Different sectors face distinct AI compliance requirements based on existing laws and industry-specific risks:

Healthcare AI Compliance

AI compliance for healthcare organizations spans multiple regulatory frameworks:

- HIPAA requires Business Associate Agreements with AI vendors that handle protected health information, technical safeguards for that information, and audit controls that record activity in systems containing it.

- FDA device regulation applies when AI diagnoses, treats, or prevents disease, with requirements varying by risk classification. [4]

- State medical practice laws may restrict AI’s role in clinical decision-making.

- Anti-discrimination laws prohibit AI systems that produce disparate health outcomes based on protected characteristics. [12]

The intersection of these requirements creates complex compliance obligations. A clinical decision support system might simultaneously fall under FDA device regulation, HIPAA privacy rules, and civil rights protections. Generative AI governance in healthcare addresses these unique challenges through specialized frameworks.

Finance Sector AI Rules

Financial services firms face stringent oversight of their AI use under existing finance regulation:

- Fair lending laws (Equal Credit Opportunity Act, Fair Housing Act) prohibit discriminatory lending algorithms.

- Fair Credit Reporting Act requires accuracy, fairness, and transparency in credit decisions.

- Bank Secrecy Act and anti-money laundering rules apply to AI-powered transaction monitoring.

- SEC regulations govern AI use in investment advice and trading.

Regulators scrutinize AI models for disparate impact on protected classes. The Consumer Financial Protection Bureau has emphasized that fair lending laws apply equally to human and algorithmic decisions.

Employment AI Guidance

AI used in employment decisions must comply with the same laws that govern human decision-makers:

- Title VII of the Civil Rights Act prohibits employment discrimination.

- Americans with Disabilities Act requires reasonable accommodations.

- Age Discrimination in Employment Act protects older workers.

- State laws like New York City’s Local Law 144 require bias audits for automated employment decision tools.

The EEOC AI guidance clarifies that employers remain liable when AI vendors’ tools produce discriminatory outcomes. Organizations cannot outsource legal responsibility to technology providers.
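The bias audits required by New York City’s Local Law 144 center on impact ratios. The city’s implementing rules define an impact ratio as a category’s selection rate divided by the selection rate of the most selected category. The sketch below computes those ratios and flags categories under the four-fifths (0.8) rule of thumb from the federal Uniform Guidelines on Employee Selection Procedures. Local Law 144 itself requires publishing the ratios but sets no pass/fail threshold, and the group names and counts here are hypothetical.

```python
def selection_rates(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Selection rate per category: number selected / number of applicants.

    Assumes every category has at least one applicant and someone was selected overall.
    """
    return {group: selected / total for group, (selected, total) in outcomes.items()}


def impact_ratios(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Each category's selection rate divided by the highest category's rate."""
    rates = selection_rates(outcomes)
    highest = max(rates.values())
    return {group: rate / highest for group, rate in rates.items()}


def below_four_fifths(ratios: dict[str, float], threshold: float = 0.8) -> list[str]:
    """Categories whose impact ratio falls under the screening threshold."""
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


# Hypothetical results: (applicants advanced, total applicants) per category.
outcomes = {"group_a": (48, 100), "group_b": (30, 100), "group_c": (45, 90)}

ratios = impact_ratios(outcomes)
print({group: round(r, 2) for group, r in ratios.items()})
# {'group_a': 0.96, 'group_b': 0.6, 'group_c': 1.0}
print(below_four_fifths(ratios))  # ['group_b']
```

A flagged category is a signal for further investigation, not proof of unlawful discrimination; the four-fifths rule is a screening heuristic.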

Challenges in Regulating AI Across States and Sectors

The fragmented regulatory landscape creates substantial challenges for organizations deploying AI at scale across states:

Enforcement gaps

No single agency has comprehensive oversight. Systems may violate multiple laws simultaneously, but enforcement depends on which agency investigates. Limited federal-state coordination creates uncertainty.

Innovation outpacing policy

AI capabilities advance faster than regulatory frameworks. Generative AI emerged before oversight mechanisms existed. New applications challenge legal categories: Does an AI chatbot providing medical information constitute practicing medicine?

Sectoral silos

Agencies regulate within traditional domains without cross-sector coordination. Modern AI systems often span multiple sectors simultaneously, creating compliance complexity.

Resource constraints

Regulators often lack the technical expertise to evaluate complex AI systems. Existing laws predate AI technology, creating uncertainty about liability, explainability requirements, and decision-making boundaries.

Calls for Federal AI Legislation and What It Could Include

A growing number of experts, industry leaders, and policymakers argue that comprehensive federal AI legislation is necessary to address regulatory fragmentation.

Why federal legislation matters

A national framework would:

- Establish consistent standards across states, reducing compliance complexity.

- Close gaps in current oversight by creating comprehensive requirements.

- Provide legal clarity for organizations and regulators.

- Enable US competitiveness by creating a predictable regulatory environment.

- Protect individuals through enforceable rights and remedies.

Key components of proposed federal AI laws:

Transparency requirements

Legislation would likely mandate disclosure when AI makes or substantially influences consequential decisions. Organizations would need to explain AI systems’ purposes, data sources, and decision-making logic in accessible language.

Bias testing and mitigation

Federal AI bias regulation may require regular bias assessments across demographic categories. Organizations would need to test AI systems before deployment and monitor for discriminatory patterns during operation, with remediation required when bias is detected.
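Monitoring for discriminatory patterns during operation can start with comparing error rates across demographic groups in each review window. The sketch below compares false negative rates (qualified cases the model wrongly rejected). The metric choice, the record format, the group labels, and the 0.05 tolerance are all hypothetical; none of the laws or proposals discussed here prescribes them.

```python
from collections import defaultdict
from typing import Iterable

# Each record: (demographic group, actually positive?, model predicted positive?)
Record = tuple[str, bool, bool]


def false_negative_rates(records: Iterable[Record]) -> dict[str, float]:
    """Share of truly positive cases the model missed, per group."""
    positives: defaultdict[str, int] = defaultdict(int)
    misses: defaultdict[str, int] = defaultdict(int)
    for group, actual, predicted in records:
        if actual:
            positives[group] += 1
            if not predicted:
                misses[group] += 1
    return {group: misses[group] / positives[group] for group in positives}


def max_rate_gap(rates: dict[str, float]) -> float:
    """Largest difference between any two groups' rates."""
    return max(rates.values()) - min(rates.values())


def needs_review(records: Iterable[Record], tolerance: float = 0.05) -> bool:
    """Flag a monitoring window whose false-negative-rate gap exceeds the tolerance."""
    return max_rate_gap(false_negative_rates(records)) > tolerance


# Hypothetical monitoring window with two groups, "x" and "y".
window = [
    ("x", True, True), ("x", True, True), ("x", True, True), ("x", True, False), ("x", False, False),
    ("y", True, True), ("y", True, False), ("y", True, True), ("y", True, False), ("y", False, True),
]
print(false_negative_rates(window))  # {'x': 0.25, 'y': 0.5}
print(needs_review(window))          # True
```

Which error rate to compare depends on the harm at stake: in lending or hiring, missed qualified applicants (false negatives) are often the primary concern, but other systems may call for different metrics.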

Accountability mechanisms

Proposed frameworks include:

- Clear liability standards for AI-caused harms.

- Requirements for human oversight of high-risk decisions.

- Audit trails documenting AI system operations.

- Incident reporting when AI systems cause harm.
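Audit trails are more useful to regulators when they are tamper-evident. One common technique, chosen here as an assumption rather than anything the proposals specify, is hash chaining: each log entry stores the SHA-256 hash of the previous entry, so editing any earlier record breaks verification. The `AuditTrail` class, its field names, and the example events are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditTrail:
    """Append-only log where each entry records the hash of the entry before it."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self) -> None:
        self.entries: list[dict] = []

    @staticmethod
    def _digest(entry: dict) -> str:
        # Hash every field except the stored hash itself, with keys in a stable order.
        payload = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(payload, sort_keys=True).encode()).hexdigest()

    def append(self, system_id: str, event: str, details: dict) -> dict:
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "system_id": system_id,
            "event": event,
            "details": details,
            "prev_hash": self.entries[-1]["hash"] if self.entries else self.GENESIS,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and check each link back to the previous entry."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash or entry["hash"] != self._digest(entry):
                return False
            prev_hash = entry["hash"]
        return True


trail = AuditTrail()
trail.append("triage-model-v3", "prediction", {"case_id": "A-001", "score": 0.82})
trail.append("triage-model-v3", "human_override", {"case_id": "A-001", "reviewer": "clinician_17"})
print(trail.verify())  # True

trail.entries[0]["details"]["score"] = 0.40  # simulate after-the-fact tampering
print(trail.verify())  # False
```

Hash chaining only detects edits; the log also needs to live somewhere the audited system cannot rewrite, since anyone able to recompute every hash could forge a consistent chain.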

Risk-based regulation

Most proposals adopt tiered approaches based on AI risk levels. High-risk systems affecting health, safety, civil rights, or employment would face stringent requirements, including stronger AI transparency rules and AI accountability protocols. Lower-risk applications would have lighter compliance obligations.
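A tiered approach amounts to a classification step followed by a lookup of obligations. This minimal sketch assumes a two-tier scheme with hypothetical domain labels, a hypothetical `classify` rule, and an illustrative obligation list drawn from the components discussed in this section; actual proposals define tiers and obligations in much more detail.

```python
from enum import Enum


class RiskTier(Enum):
    HIGH = "high"
    LOWER = "lower"


# Domains named above as high-risk; an illustrative list, not a statutory definition.
HIGH_RISK_DOMAINS = frozenset({"health", "safety", "civil_rights", "employment"})

# Hypothetical obligations per tier, loosely following the proposal components above.
OBLIGATIONS = {
    RiskTier.HIGH: [
        "disclosure when AI influences a decision",
        "pre-deployment and ongoing bias testing",
        "human oversight of decisions",
        "audit trail",
        "incident reporting",
    ],
    RiskTier.LOWER: ["basic documentation"],
}


def classify(domain: str, influences_consequential_decision: bool) -> RiskTier:
    """High risk only when the system operates in a listed domain AND shapes a consequential decision."""
    if domain in HIGH_RISK_DOMAINS and influences_consequential_decision:
        return RiskTier.HIGH
    return RiskTier.LOWER


for domain, decides in [("employment", True), ("employment", False), ("marketing", True)]:
    tier = classify(domain, decides)
    print(domain, decides, tier.value, OBLIGATIONS[tier])
```

Requiring both conditions keeps, for example, an internal scheduling tool used by an HR team out of the high-risk tier even though it sits in the employment domain.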

Enforcement protocols

Federal legislation would likely establish:

- Designated enforcement agencies with AI expertise.

- Civil penalties for violations.

- Private rights of action for affected individuals.

- Regular reporting requirements for high-risk AI deployers, which organizations may meet with the help of dedicated AI compliance tools.

Preemption and ethical AI use remain contentious. Should federal law preempt state AI regulations, or should states retain authority to impose stricter requirements? Industry groups favor preemption for consistency, while consumer advocates prefer state flexibility, particularly for AI transparency rules and AI accountability standards that exceed federal baselines.

Key Takeaway: Preparing for Compliance in a Shifting Regulatory Environment

Organizations cannot wait for federal AI legislation. Regulatory expectations exist now through agency enforcement actions, even without explicit statutory mandates. Proactive governance provides a competitive advantage. Companies establishing robust AI oversight today adapt more easily to future regulations. Delay risks rushed compliance, enforcement actions, and reputational damage.

Essential preparation steps:

- Internal audits. Inventory AI systems across the organization. Assess risk levels, regulatory touchpoints, and compliance status.

- Transparency practices. Communicate clearly about AI use. Provide notice when AI influences decisions.

- Bias testing. Establish regular testing protocols across demographic categories. Document methodologies and remediation processes.

- Documentation standards. Maintain comprehensive records covering AI lifecycles from development through monitoring.

- Governance structures. Create cross-functional committees with legal, compliance, IT, and business representatives.

- Vendor management. Implement rigorous due diligence. Require transparency about algorithms and training data.

- Regulatory monitoring. Track federal guidance, state legislation, and enforcement actions from FDA, FTC, and HHS.

- Staff training. Educate employees about responsible AI principles and compliance obligations.
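The internal audit step starts with an inventory that records, for each AI system, the facts that determine which rules apply. This is a simplified, hypothetical triage sketch: the `AISystem` fields, the jurisdiction codes, and the mapping rules are illustrative assumptions based on the frameworks discussed in this article, and a flagged touchpoint is a prompt for legal review rather than a legal determination.

```python
from dataclasses import dataclass, field


@dataclass
class AISystem:
    name: str
    processes_phi: bool = False
    is_medical_device: bool = False
    used_in_hiring: bool = False
    collects_biometrics: bool = False
    makes_consequential_decisions: bool = False
    marketed_ai_claims: bool = False
    jurisdictions: set[str] = field(default_factory=set)  # e.g. {"CO", "IL", "NYC"}


def regulatory_touchpoints(system: AISystem) -> list[str]:
    """Simplified rules mapping inventory facts to frameworks discussed in this article."""
    hits = []
    if system.is_medical_device:
        hits.append("FDA device regulation")
    if system.processes_phi:
        hits.append("HIPAA (HHS OCR)")
    if system.used_in_hiring:
        hits.append("Title VII / ADA / ADEA (EEOC)")
        if "NYC" in system.jurisdictions:
            hits.append("NYC Local Law 144 bias audit")
    if system.collects_biometrics and "IL" in system.jurisdictions:
        hits.append("Illinois BIPA")
    if system.makes_consequential_decisions and "CO" in system.jurisdictions:
        hits.append("Colorado AI Act (SB 24-205)")
    if system.marketed_ai_claims:
        hits.append("FTC Act (deceptive AI claims)")
    return hits


# Hypothetical inventory entries.
inventory = [
    AISystem("resume-screener", used_in_hiring=True, makes_consequential_decisions=True,
             jurisdictions={"NYC", "CO"}),
    AISystem("sepsis-alert", processes_phi=True, is_medical_device=True,
             makes_consequential_decisions=True),
    AISystem("meeting-summarizer"),
]

for system in inventory:
    print(system.name, "->", regulatory_touchpoints(system) or ["no touchpoints flagged"])
```

Keeping the rules in one function makes it easy to add a new state law to every inventory entry at once when the regulatory monitoring step surfaces a change.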

Organizations should consider responsible AI audits to validate governance frameworks. Independent audits identify gaps and demonstrate good-faith efforts to regulators.

References:

[1] Quinn Emanuel Urquhart & Sullivan, LLP. “When Machines Discriminate: The Rise of AI Bias Lawsuits.” https://www.quinnemanuel.com/the-firm/publications/when-machines-discriminate-the-rise-of-ai-bias-lawsuits/

[2] U.S. Food and Drug Administration. “Artificial Intelligence (AI) and Machine Learning (ML) Enabled Medical Devices.” https://www.fda.gov/medical-devices/software-medical-device-samd/artificial-intelligence-enabled-medical-devices

[3] Healthcare Financial Management Association. “Health System Adoption of AI Outpaces Internal Governance and Strategy.” https://www.hfma.org/press-releases/health-system-adoption-of-ai-outpaces-internal-governance-and-strategy/

[4] U.S. Food and Drug Administration. “Artificial Intelligence and Software as a Medical Device (SaMD).” https://www.fda.gov/medical-devices/software-medical-device-samd/artificial-intelligence-software-medical-device

[5] Federal Trade Commission. “FTC Announces Crackdown on Deceptive AI Claims and Schemes.” Published September 2024. https://www.ftc.gov/news-events/news/press-releases/2024/09/ftc-announces-crackdown-deceptive-ai-claims-schemes

[6] U.S. Department of Health and Human Services, Office for Civil Rights. “Enforcement Highlights – Current.” Updated November 21, 2024. https://www.hhs.gov/hipaa/for-professionals/compliance-enforcement/data/enforcement-highlights/index.html

[7] U.S. Department of Health and Human Services, Office for Civil Rights. “Covered Entities and Business Associates.” Updated August 21, 2024. https://www.hhs.gov/hipaa/for-professionals/covered-entities/index.html

[8] U.S. Equal Employment Opportunity Commission. “EEOC History: 2020 – 2024.” https://www.eeoc.gov/history/eeoc-history-2020-2024

[9] National Institute of Standards and Technology. “AI Risk Management Framework.” https://www.nist.gov/itl/ai-risk-management-framework

[10] U.S. Department of Justice, Civil Rights Division. “Artificial Intelligence and Civil Rights.” https://www.justice.gov/crt/ai

[11] U.S. Congress. H.R. 5511 – Algorithmic Accountability Act of 2025 (119th Congress, 2025–2026). https://www.congress.gov/bill/119th-congress/house-bill/5511

[12] U.S. Department of Health and Human Services. “Non-Discrimination in Health Programs and Activities.” Federal Register, May 6, 2024. https://www.federalregister.gov/documents/2024/05/06/2024-08711/nondiscrimination-in-health-programs-and-activities

FAQ

Is there a comprehensive federal AI law in the United States?

No, the United States does not have a single comprehensive federal AI law like the EU AI Act. Instead, AI is regulated through sector-specific rules administered by various federal agencies, AI executive orders (which can change between administrations), and existing laws applied to AI use cases.

Which federal agencies regulate AI in the United States?

Multiple federal agencies share AI oversight: the FDA regulates medical devices, HHS enforces HIPAA for healthcare data, the FTC monitors consumer protection and deceptive practices, the EEOC addresses employment discrimination, NIST develops technical standards, and the DOJ enforces civil rights laws. Each agency applies its existing statutory authority to AI within its domain.

What are the penalties for AI-related regulatory violations?

Penalties vary by violation type. HIPAA violations can result in fines of up to $1.5 million per violation category per calendar year under the statute, an amount HHS adjusts annually for inflation. FTC enforcement actions can include millions in civil penalties plus corrective requirements. FDA violations may result in warning letters, product seizures, or injunctions. Employment discrimination cases can include back pay, compensatory damages, and punitive damages.

Do I need FDA approval for all healthcare AI systems?

Not all healthcare AI systems require FDA approval. Only AI that qualifies as a medical device, meaning it diagnoses, treats, cures, mitigates, or prevents disease, falls under FDA jurisdiction. Administrative AI tools, scheduling systems, and billing applications typically do not require FDA review. However, clinical decision support systems that provide specific treatment recommendations often do require authorization.

When does Colorado’s AI Act take effect and who does it apply to?

Colorado’s AI Act (SB 24-205) takes effect June 30, 2026. It applies to developers and deployers of “high-risk” AI systems that make or substantially factor into consequential decisions about healthcare, employment, education, financial services, housing, insurance, or legal services. Healthcare organizations using AI that significantly impacts patient care or access to services likely fall under this law’s requirements.

What is the FTC’s Operation AI Comply?

Operation AI Comply is an FTC enforcement initiative launched in September 2024 targeting companies that make deceptive claims about AI capabilities or use AI in unfair or deceptive ways. The FTC announced five initial enforcement actions, focusing on what is often called “AI washing”: false or misleading claims about AI functionality. This signals increased regulatory scrutiny of AI marketing and deployment practices.

How often should we audit our AI systems for compliance?

Audit frequency depends on risk level. High-risk AI systems affecting clinical decisions should undergo quarterly reviews. Moderate-risk systems may require semi-annual audits. Low-risk administrative AI can be reviewed annually. Any significant algorithm changes, performance drift, or regulatory updates should trigger immediate compliance review. Organizations should also conduct audits before major deployments and after any regulatory enforcement actions in their sector.
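The cadence above can be encoded as a simple scheduling rule: a default interval per risk level, overridden by any triggering event. In this sketch the risk-level keys, the event names, and the `next_review` helper are hypothetical; the quarterly, semi-annual, and annual intervals come from the answer above.

```python
import calendar
from datetime import date

# Default review intervals in months, following the cadence described above.
REVIEW_INTERVAL_MONTHS = {"high": 3, "moderate": 6, "low": 12}

# Hypothetical event labels for the situations that call for an immediate review.
IMMEDIATE_TRIGGERS = {
    "algorithm_change",
    "performance_drift",
    "regulatory_update",
    "planned_major_deployment",
    "sector_enforcement_action",
}


def add_months(d: date, months: int) -> date:
    """Shift a date by whole months, clamping to the last day of shorter months."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def next_review(risk_level: str, last_review: date, events: set[str], today: date) -> date:
    """Review immediately if any trigger occurred; otherwise use the default interval."""
    if events & IMMEDIATE_TRIGGERS:
        return today
    return add_months(last_review, REVIEW_INTERVAL_MONTHS[risk_level])


print(next_review("high", date(2025, 11, 30), set(), today=date(2025, 12, 1)))  # 2026-02-28
print(next_review("moderate", date(2025, 6, 1), {"performance_drift"}, today=date(2025, 7, 10)))  # 2025-07-10
```

The month clamping matters for quarterly schedules: a review on November 30 cannot roll forward to February 30, so it lands on the last day of February instead.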

What is the AI Bill of Rights?

The Blueprint for an AI Bill of Rights is a non-binding framework released by the White House in October 2022. It identifies five principles: safe and effective systems, algorithmic discrimination protections, data privacy, notice and explanation, and human alternatives. While not legally enforceable, it influences agency rulemaking and sets expectations for responsible AI practices.

What documentation should we maintain for AI systems?

Maintain comprehensive records including: training data sources and characteristics, validation and testing results (including bias assessments across demographic groups), deployment parameters and limitations, human oversight protocols, performance monitoring data, security measures, incident reports, vendor contracts and Business Associate Agreements, and all regulatory submissions or correspondence. Documentation should cover the entire AI lifecycle from development through decommissioning and be readily accessible for regulatory inquiries.