State-level AI regulation in the United States is moving quickly, and Colorado has set one of the clearest early expectations for how high-risk AI should be governed in practice. Colorado’s SB24-205, often referred to as the Colorado AI Act, establishes duties for both developers and deployers of high-risk artificial intelligence systems to use reasonable care to protect consumers from known or reasonably foreseeable risks of algorithmic discrimination. As enacted, the law applied on and after February 1, 2026; a 2025 special-session amendment (SB25B-004) moved that date to June 30, 2026, so readiness remains a near-term governance priority rather than a distant compliance item.

For most organizations, SB24-205 is best understood as an operational signal. It does not treat compliance as a single policy document. It points toward a repeatable governance program that can be explained, evidenced, and maintained over time, particularly through impact assessments, risk management practices, transparency and notice obligations, and recurring reviews. Colorado’s legislative summary makes this direction explicit by describing requirements such as deployer risk management programs, deployer impact assessments, and annual review of high-risk AI deployments to check for algorithmic discrimination.

This is also why many teams are shifting from ad hoc policy updates to a continuously maintained governance foundation. Pacific AI maintains a Governance Policy Suite that is updated as laws and standards evolve. The Q4 2025 release notes identify Colorado SB24-205 coverage as part of that update cycle, so teams can anchor their program in a single, unified set of policies and controls rather than rewriting internal standards each quarter.

What the Colorado AI Act is trying to prevent

SB24-205 is framed around consumer protection in scenarios where AI systems make, or substantially influence, consequential decisions about individuals. The core risk focus is algorithmic discrimination, and the law distributes responsibilities across both developers and deployers because discrimination risk is not confined to model design. It can be introduced through training data, threshold choices, post-processing rules, user interfaces, human override practices, or a deployment context that changes over time. Colorado’s bill summary also emphasizes operational mechanisms, including deployer notices to consumers when a high-risk AI system is used in consequential decisions, and processes related to correction and appeal, including human review when technically feasible.

In practical governance terms, the most important takeaway is that discrimination risk management is rarely solved by a single metric. It becomes manageable when the organization can show a lifecycle approach that connects system purpose, intended use, foreseeable use, assessment, mitigations, oversight, and monitoring.

High-risk AI under SB24-205 in practical terms

SB24-205 uses a risk-based framing centered on high-risk systems involved in consequential decisions concerning a consumer. In practice, the fastest way to scope exposure is to start with the decision workflow rather than the model. If a system’s output can materially influence outcomes such as employment, housing, lending and credit, insurance, education, healthcare access, or other essential services, it should be treated as high-risk until a documented rationale supports a different classification. This decision-first approach aligns with how the law is structured, because it focuses on consumer outcomes and governance obligations, including impact assessments and notices, not only technical design.
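The decision-first scoping rule described above can be sketched in a few lines of Python. This is a minimal illustration, not a legal test: the domain list simply mirrors the examples in the paragraph above rather than the statutory definitions, and the names `DecisionWorkflow`, `classify`, and the classification labels are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

# Assumption: illustrative domains copied from the paragraph above,
# not the statutory definition of a consequential decision.
CONSEQUENTIAL_DOMAINS = {
    "employment", "housing", "lending", "insurance",
    "education", "healthcare", "essential_services",
}


@dataclass
class DecisionWorkflow:
    """Hypothetical record describing one decision workflow that uses AI."""
    name: str
    domain: str
    ai_materially_influences_outcome: bool
    # Documented rationale supporting a different classification, if any.
    documented_exception: Optional[str] = None


def classify(workflow: DecisionWorkflow) -> str:
    """Default to high-risk unless a documented rationale says otherwise."""
    in_scope = (
        workflow.domain in CONSEQUENTIAL_DOMAINS
        and workflow.ai_materially_influences_outcome
    )
    if not in_scope:
        return "out-of-scope"
    if workflow.documented_exception:
        return "reclassified-with-rationale"
    return "high-risk"


print(classify(DecisionWorkflow("resume screening", "employment", True)))
```

The design choice worth noting is the default: a workflow in a listed domain is treated as high-risk unless someone has recorded a rationale, which keeps the burden on documenting exceptions rather than on proving risk.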

Developer duties and deployer duties

SB24-205 makes a meaningful distinction between developer and deployer responsibilities, and that split should be reflected in internal accountability. According to Colorado’s legislative materials and signed bill text, developers of high-risk AI systems are expected to use reasonable care and provide deployers with the information and documentation needed to complete impact assessments, along with required disclosures. The legislative summary also describes a reporting obligation when a developer discovers credible evidence that its system has caused, or is reasonably likely to cause, algorithmic discrimination, including notifying the Colorado Attorney General and known deployers within specified timeframes.

Deployers are responsible for how the system is used in real decision-making contexts. Colorado’s legislative summary describes deployer duties that include implementing a risk management policy and program, completing an impact assessment, reviewing deployments annually, notifying consumers when a high-risk AI system is used for consequential decisions, and enabling consumers to correct incorrect personal data and appeal adverse consequential decisions, including human review where technically feasible.

For organizations that both develop and deploy, the most defensible posture is to treat developer documentation and deployer governance as two linked parts of one evidence trail. That evidence trail should connect system documentation to the deployer’s impact assessment, to mitigations and oversight decisions, and then to monitoring and periodic review.

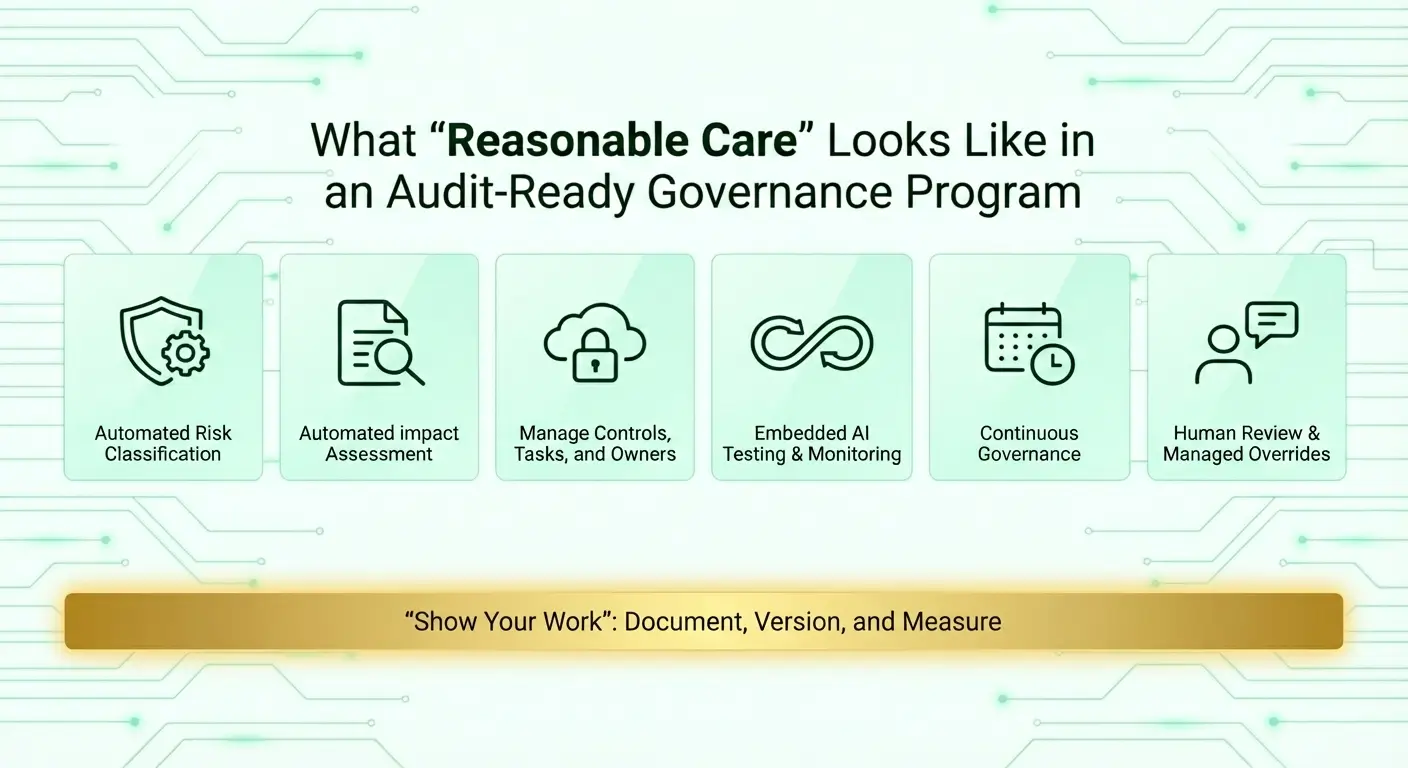

What “reasonable care” looks like in an audit-ready governance program

“Reasonable care” can sound abstract until it is translated into governance behaviors that an organization can demonstrate with records. SB24-205’s design is instructive here. It creates a rebuttable presumption mechanism and ties duties to operational processes such as impact assessments, annual reviews, and deployer risk management programs. These features strongly signal that Colorado expects organizations to be able to show what they did, when they did it, and how they sustain oversight over time.

In practice, reasonable care tends to be provable when an organization can consistently answer the following questions:

- Can the organization show where high-risk AI exists and explain why each system is classified as high-risk, including the consequential decision points it influences?

- Can the organization produce an impact assessment that reflects the actual deployment context, including foreseeable discrimination-related risk pathways?

- Can the organization show what controls were selected in response to identified risks, who approved them, and how changes and exceptions are governed?

- Can the organization demonstrate ongoing monitoring and a review cadence that can detect new risks as data, populations, or use cases change, including reassessment triggers?

- Can the organization demonstrate consumer-facing operational steps where required, including notices and pathways for correction and appeal, with human review where technically feasible?

This is the difference between a compliance document and an operating program.

A practical implementation path that supports SB24-205 readiness

Teams tend to move faster when SB24-205 readiness is implemented as a repeatable governance workflow. It usually starts with mapping where AI is used in consequential decisions and documenting whether the system output is used directly or is a substantial factor in the decision. Once systems are scoped, impact assessments should reflect the deployer’s real thresholds, business rules, user experience, and human oversight practices, because these implementation details can materially affect discrimination risk.

From there, controls should be formalized as operating procedures. That typically includes an evaluation cadence, approval gates for changes, escalation paths for discrimination-related signals, and documented responsibilities across product, legal, compliance, and technical owners. Where systems are sourced externally, vendor governance becomes central because deployers still need enough documentation from developers to complete impact assessments and perform oversight. The developer and deployer split in SB24-205 is designed to make those dependencies explicit, and to reduce the likelihood that deployers adopt high-risk systems without the ability to demonstrate reasonable care.
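One way to make the evaluation cadence and escalation paths concrete is a small check that reports why a deployment needs reassessment. The sketch below assumes a 365-day interval as a stand-in for the annual review described in the legislative summary; the function name `reassessment_reasons` and the trigger names are hypothetical, and a real program would define its own triggers.

```python
from datetime import date, timedelta

# Assumption: a 365-day interval approximates the annual deployment review.
REVIEW_INTERVAL = timedelta(days=365)


def reassessment_reasons(
    last_review: date,
    today: date,
    triggers: dict[str, bool],
) -> list[str]:
    """Return the reasons a deployment should be reassessed (empty if none)."""
    # Any trigger that has fired, e.g. a changed threshold or a new population.
    reasons = [name for name, fired in triggers.items() if fired]
    # The periodic review applies even when no trigger has fired.
    if today - last_review > REVIEW_INTERVAL:
        reasons.append("annual_review_overdue")
    return reasons


reasons = reassessment_reasons(
    last_review=date(2025, 1, 15),
    today=date(2026, 3, 1),
    triggers={"decision_threshold_changed": True, "new_consumer_population": False},
)
print(reasons)  # ['decision_threshold_changed', 'annual_review_overdue']
```

Returning the list of reasons, rather than a single yes or no, gives the escalation path something to record in the decision log.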

Documentation and evidence that tends to matter most

Colorado’s legislative materials repeatedly point toward documentation that connects risk identification, assessment, mitigation, and ongoing review. In operational terms, organizations are generally best served by maintaining a consistent evidence pack that can be updated as systems and contexts change. This usually includes an AI system register entry with a documented high-risk rationale, an impact assessment record, documentation of evaluation methods and results, a record of mitigations and residual risk acceptance, governance approvals and decision logs, and a monitoring plan with reassessment triggers. Where required, it also includes documentation showing how consumer notices, correction mechanisms, and appeal workflows are implemented and governed.
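The evidence pack described above can be treated as a checklist that is verified per system. The following is a rough sketch under stated assumptions: the artifact names are shorthand for the items listed in the paragraph, and `EvidencePack`, `REQUIRED_ARTIFACTS`, and the example locations are hypothetical rather than terms from the law or from any particular tool.

```python
from dataclasses import dataclass, field

# Assumption: shorthand labels for the evidence items listed in the paragraph above.
REQUIRED_ARTIFACTS = (
    "register_entry_with_high_risk_rationale",
    "impact_assessment",
    "evaluation_methods_and_results",
    "mitigations_and_residual_risk_acceptance",
    "approvals_and_decision_log",
    "monitoring_plan_with_reassessment_triggers",
)


@dataclass
class EvidencePack:
    """Hypothetical per-system evidence pack: artifact label -> document location."""
    system_name: str
    artifacts: dict[str, str] = field(default_factory=dict)

    def missing(self) -> list[str]:
        """List required artifacts that have no recorded location."""
        return [a for a in REQUIRED_ARTIFACTS if not self.artifacts.get(a)]


pack = EvidencePack(
    system_name="loan-prequalification-model",
    artifacts={
        "register_entry_with_high_risk_rationale": "register/loan-prequal.md",
        "impact_assessment": "assessments/loan-prequal-2026.pdf",
    },
)
print(pack.missing())
```

Consumer notice, correction, and appeal documentation could be added to the required list for systems where those obligations apply.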

Federal landscape watch and why governance work should continue

On December 11, 2025, the White House issued an Executive Order titled “Ensuring a National Policy Framework for Artificial Intelligence,” which expresses a preference for a minimally burdensome national framework and calls for actions that may challenge state AI laws that conflict with federal policy. Several legal analyses also note the order’s direction to establish a litigation task force focused on state-law challenges.

For governance teams, the practical response is to monitor developments while continuing implementation. Core governance controls such as inventory, impact assessments, documentation, monitoring, accountability, and vendor oversight remain valuable across frameworks even when regulatory dynamics shift.

Automating Compliance at Scale on the Pacific AI Platform

Compliance with the Colorado AI Act can require significant effort across the AI development lifecycle: risk classification and impact assessment for each proposed project, testing and monitoring while a system is being developed, and ongoing tracking of controls. The Pacific AI platform is designed to remove most of this overhead through automation while supporting state-of-the-art levels of governance and safety:

- Automated risk classification and risk assessment

- Continuous governance: as the Colorado law makes clear, governance is not a one-time action and has to be kept current. Regular updates to the policy suite are included, so whenever a new regulation passes or a new risk is uncovered, the suite and the platform reflect it.

- Integrated testing and monitoring: rather than running testing tools, LLM evaluation tools, or bias testing separately from the governance suite, Pacific AI is an end-to-end platform that brings together lawyers, compliance officers, software developers, data scientists, and DevOps operators. Testing and monitoring happen within the same platform and are tracked together, which makes it far easier to prove compliance from daily operations.

How the Pacific AI Platform Operationalizes SB24-205 Compliance

True compliance with the Colorado AI Act requires moving beyond static documents to a technical foundation that can build, operate, and govern AI systems in real time.

The Pacific AI platform provides the automated “proof of reasonable care” that regulators demand.

Through Pacific AI Governor, organizations can automate the most complex requirements of SB24-205, including recurring impact assessments and the generation of SB24-205-compliant model code. This keeps governance an active part of the development lifecycle and gives auditors a clear, version-controlled trail of compliance.

Once deployed, Pacific AI Guardian provides the continuous monitoring necessary to prevent algorithmic discrimination before it impacts consumers. By integrating these capabilities into a single platform, Pacific AI transforms governance from a manual hurdle into a scalable operational advantage.

Pacific AI is the system of record for regulated AI, making it far easier to build and operate well-governed AI systems – and to prove to regulators and auditors that this happens.

Book a demo to see how Pacific AI automates Colorado AI Act compliance.