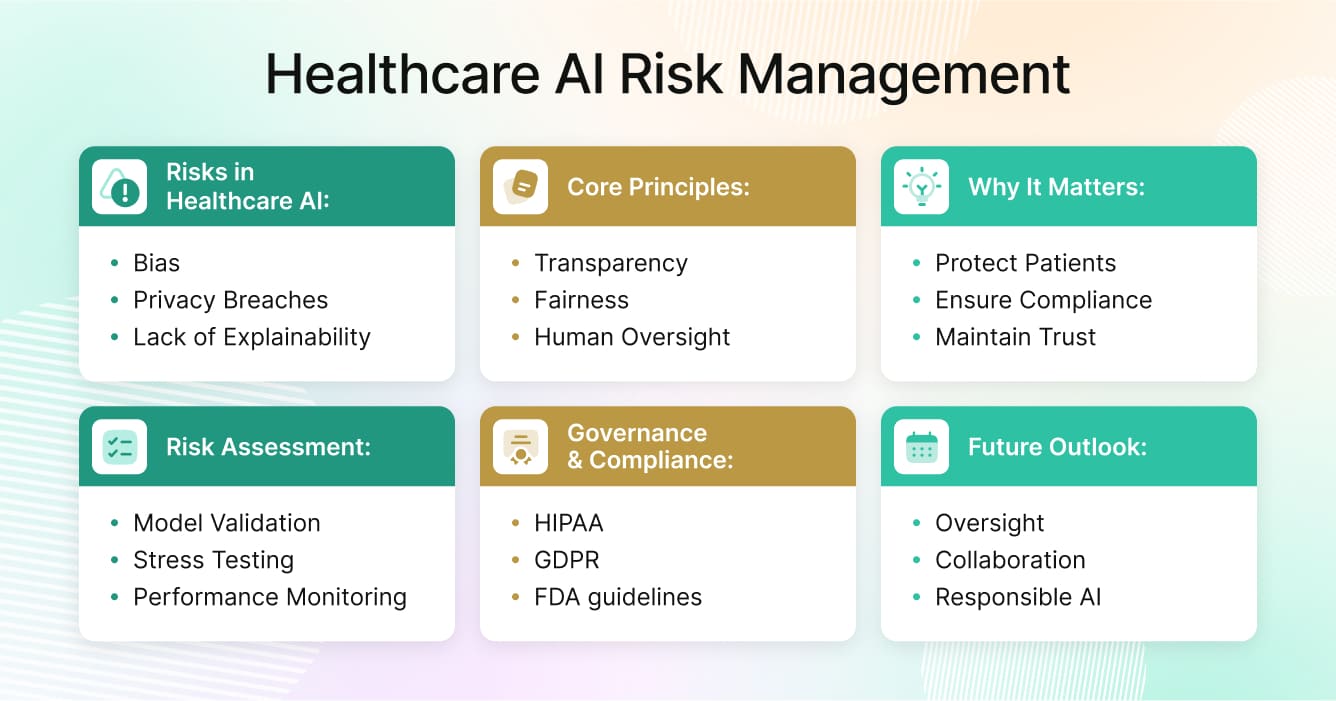

Artificial intelligence has become an essential part of modern healthcare. From clinical decision support and radiology to predictive analytics and administrative efficiency, AI systems are reshaping how hospitals, insurers, and life sciences organizations operate. But the promise of healthcare AI also comes with responsibility. When algorithms make or inform medical decisions, the consequences of bias, error, or data misuse can directly affect patient outcomes. That’s why robust healthcare AI risk management is not optional—it’s fundamental to safe and ethical deployment.

What Are the Risks of AI in Healthcare?

AI offers speed and scalability, but it can also introduce new forms of risk that traditional quality assurance processes were never designed to handle. The key challenge is balancing innovation with safety.

Algorithmic bias and fairness issues

AI models learn from historical data, and healthcare data often reflects long-standing inequities. When training datasets underrepresent certain populations, algorithms may produce less accurate results for those groups. For example, diagnostic models built primarily on data from Western populations have shown poorer performance on patients from underrepresented ethnic groups. This can lead to unequal treatment recommendations, reinforcing disparities rather than reducing them.

Lack of explainability and clinical transparency

Many advanced AI systems, especially deep learning models, produce results without clear explanations. Clinicians may be presented with a diagnosis or risk score but have no insight into the reasoning behind it. This “black box” effect undermines accountability and makes it difficult to validate whether model outputs align with medical standards. When clinicians cannot interpret AI outputs confidently, trust erodes.

Data privacy and security concerns

AI systems in healthcare require access to large volumes of sensitive data. Whether for model training, fine-tuning, or deployment, this often involves cross-border data transfers and integration of third-party platforms. Each additional connection increases exposure to privacy breaches or cyberattacks. A single data leak can trigger costly penalties under HIPAA or GDPR and damage patient confidence in digital health initiatives.

Model drift and performance degradation

A deployed model is usually fixed, but the data it encounters is not. A model that performs well today may produce inaccurate predictions tomorrow if disease prevalence, population behavior, or healthcare delivery models change. Without continuous monitoring and regular audits, organizations risk using outdated algorithms that no longer reflect reality.
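One common way to quantify the kind of shift described above is the population stability index (PSI), which compares how a feature or score was distributed at training time with how it is distributed in production. The sketch below is illustrative only: the function name, bin edges, and sample ages are assumptions, and the 0.2 alert level is a widely used rule of thumb rather than a clinical standard.

```python
import math

def population_stability_index(expected, actual, bin_edges, eps=1e-6):
    """PSI between a baseline sample and a recent sample of one numeric feature."""
    def bin_fractions(values):
        counts = [0] * (len(bin_edges) + 1)
        for v in values:
            # Bin index = number of edges at or below the value.
            idx = sum(1 for edge in bin_edges if v >= edge)
            counts[idx] += 1
        total = len(values)
        # Floor empty bins at eps so the logarithm stays defined.
        return [max(c / total, eps) for c in counts]

    e = bin_fractions(expected)
    a = bin_fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

# Hypothetical patient ages: training-time baseline vs. a recent window.
baseline_ages = [34, 45, 52, 61, 67, 70, 73, 58, 49, 66]
recent_ages = [71, 75, 80, 68, 77, 82, 79, 74, 69, 85]
edges = [40, 55, 70]

psi_same = population_stability_index(baseline_ages, baseline_ages, edges)
psi_shifted = population_stability_index(baseline_ages, recent_ages, edges)
drift_flag = psi_shifted > 0.2  # rule-of-thumb alert level, an assumption
```

A PSI near zero means the two samples look alike; a large value, as with the older recent cohort here, is a prompt to investigate and possibly retrain, not proof that the model is wrong.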

AI in healthcare requires vigilant oversight. Continuous monitoring, transparent documentation, and periodic audits ensure that algorithms remain safe, accurate, and aligned with evolving standards for responsible AI oversight.

Why AI Risk Management in Healthcare Matters

Healthcare AI risk management is about more than compliance. It is about protecting patients, maintaining trust, and ensuring that innovation benefits everyone. Healthcare organizations operate under a level of scrutiny few other industries face. Errors in AI can translate into misdiagnoses, patient harm, or regulatory violations.

Protecting patients and ensuring safety

Every healthcare AI system should start with a single guiding principle: patient safety. Unintended errors from a predictive model can have serious clinical consequences. A mislabeled scan, an incorrect triage score, or an inaccurate medication recommendation can endanger lives. Effective risk management processes help detect these issues before they cause harm.

Meeting regulatory and legal requirements

AI in healthcare operates under an expanding web of regulations. In the United States, HIPAA governs data privacy, while the FDA provides guidance on Software as a Medical Device (SaMD). In Europe, GDPR and the Medical Device Regulation (MDR) require transparency, explainability, and data protection. Aligning with these frameworks through strong governance policies not only avoids penalties but also provides legal defensibility if systems are challenged in court.

Maintaining trust in healthcare AI

AI adoption in medicine depends on trust. Patients and clinicians must believe that algorithms are fair, transparent, and reliable. Public confidence in healthcare AI grows when organizations demonstrate accountability through regular audits, open communication, and clear documentation of how systems are tested and validated.

Risk management builds this trust by showing that AI is not replacing human judgment but strengthening it.

Core Principles for Managing AI Risk in Healthcare

Healthcare leaders can’t eliminate risk entirely, but they can reduce it significantly through strong healthcare AI risk management and governance grounded in clear ethical principles. Four foundational pillars guide responsible AI management: transparency, fairness, accountability, and human oversight.

Transparency ensures that clinicians, regulators, and patients understand how AI systems function. This involves documenting the data sources used for training, disclosing potential limitations, and providing interpretable outputs. Explainability tools such as SHAP and LIME can help visualize which features drive an AI’s decision, making it easier for medical professionals to validate or challenge results.

Fairness demands that AI systems perform equally well across diverse populations. Continuous testing across gender, ethnicity, and age groups is critical. When bias is detected, retraining with more representative data or reweighting training samples can help restore balance.
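A minimal sketch of subgroup testing, assuming binary labels and a hypothetical record layout of (group, true label, predicted label): it computes recall (sensitivity) per group and flags the model when the gap between the best and worst group exceeds a tolerance. The group names, data, and the 0.10 tolerance are made up for illustration.

```python
from collections import defaultdict

def recall_by_group(records):
    """records: iterable of (group, y_true, y_pred) with binary 0/1 labels."""
    tp = defaultdict(int)
    fn = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            if y_pred == 1:
                tp[group] += 1
            else:
                fn[group] += 1
    groups = set(tp) | set(fn)  # only groups with at least one positive case
    return {g: tp[g] / (tp[g] + fn[g]) for g in groups}

# Hypothetical evaluation records for two patient subgroups.
records = [
    ("group_a", 1, 1), ("group_a", 1, 1), ("group_a", 1, 1),
    ("group_a", 1, 0), ("group_a", 0, 0),
    ("group_b", 1, 1), ("group_b", 1, 0), ("group_b", 1, 0),
    ("group_b", 1, 0), ("group_b", 0, 1),
]

recalls = recall_by_group(records)
gap = max(recalls.values()) - min(recalls.values())
needs_review = gap > 0.10  # tolerance is an assumption, set per use case
```

Recall is only one lens. The same pattern applies to false-positive rate or calibration, and the right metric depends on which kind of error is most harmful for the clinical task.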

Accountability requires identifying clear ownership at every stage of the model lifecycle. Data scientists, compliance officers, and clinical leads each play a role in documenting decisions and ensuring traceability from development to deployment.

Human oversight must remain a non-negotiable part of healthcare AI risk management. While algorithms can assist in diagnosis or treatment planning, clinicians must retain final authority. Human-in-the-loop governance prevents overreliance on automation and preserves professional responsibility.
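One way to make human-in-the-loop governance concrete is a routing rule in which no model output is acted on without a clinician. The sketch below is an assumption-laden illustration: the `ModelOutput` fields, the queue names, and both thresholds are hypothetical and would be set by clinical leadership in practice.

```python
from dataclasses import dataclass

@dataclass
class ModelOutput:
    patient_id: str
    risk_score: float   # model's estimated probability, 0.0 to 1.0
    confidence: float   # model's self-reported confidence, 0.0 to 1.0

def route(output, confidence_floor=0.85, high_risk=0.7):
    """Return the review path for one prediction; every path ends with a clinician."""
    if output.confidence < confidence_floor:
        # Low-confidence outputs are shown as unverified and need full review.
        return "clinician_review_required"
    if output.risk_score >= high_risk:
        # Confident, high-risk outputs jump the queue but are still reviewed.
        return "clinician_review_priority"
    return "clinician_sign_off"

uncertain = route(ModelOutput("p-001", risk_score=0.90, confidence=0.60))
urgent = route(ModelOutput("p-002", risk_score=0.82, confidence=0.95))
routine = route(ModelOutput("p-003", risk_score=0.20, confidence=0.95))
```

The design choice worth noting is that there is no branch that bypasses a clinician; the model only changes the order and depth of review.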

To understand how these principles connect to broader governance strategies, see Pacific AI’s article on AI healthcare policy.

Risk Assessment Techniques for AI in Clinical Use

Assessing AI risk in healthcare requires both technical rigor and clinical judgment. Before an AI system is approved for use in a hospital or research setting, it must go through multiple layers of testing and validation.

Clinical model validation is a critical component of healthcare AI risk management. Its purpose is to confirm that the AI performs consistently across datasets. This includes internal validation (using held-out data from the original dataset) and external validation (testing on entirely new data, ideally from a different site or population). Models that perform well in controlled environments often fail in real-world conditions if they haven’t been validated externally.

Scenario-based stress testing helps simulate unusual conditions, such as incomplete data, conflicting sensor readings, or variations in disease patterns. By exposing models to extreme scenarios, healthcare organizations can identify vulnerabilities before they become systemic problems.
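A stress-test harness can be sketched in a few lines. Everything here is hypothetical: `toy_triage_score` is a stand-in for a real model, and the input names, coefficients, and scenarios are invented to show the pattern of feeding degraded inputs to a model and recording how it responds.

```python
import math

REQUIRED = ("age", "systolic_bp", "heart_rate")

def toy_triage_score(vitals):
    """Stand-in for a real model: returns a score in [0, 1] or raises ValueError."""
    for key in REQUIRED:
        value = vitals.get(key)
        if value is None or not math.isfinite(value):
            raise ValueError(f"missing or invalid input: {key}")
    raw = (0.01 * vitals["age"]
           + 0.005 * abs(vitals["systolic_bp"] - 120)
           + 0.004 * abs(vitals["heart_rate"] - 75))
    return min(1.0, max(0.0, raw))

def stress_cases(baseline):
    """Yield (scenario name, degraded input) pairs derived from one valid record."""
    yield "baseline", dict(baseline)
    for key in REQUIRED:
        dropped = dict(baseline)
        dropped.pop(key)
        yield f"missing_{key}", dropped
    yield "implausible_bp", {**baseline, "systolic_bp": 900}
    yield "nan_heart_rate", {**baseline, "heart_rate": float("nan")}

def run_stress_test(model, baseline):
    results = {}
    for name, case in stress_cases(baseline):
        try:
            score = model(case)
            results[name] = "ok" if 0.0 <= score <= 1.0 else "out_of_range"
        except ValueError:
            results[name] = "rejected"      # explicit refusal is acceptable
        except Exception as exc:            # anything else is an unhandled failure
            results[name] = f"crashed: {type(exc).__name__}"
    return results

report = run_stress_test(toy_triage_score,
                         {"age": 60, "systolic_bp": 130, "heart_rate": 80})
```

In this run the missing and NaN inputs are rejected cleanly, but the implausible blood pressure is accepted and silently clamped to a valid score. That is the kind of behavior a stress test is meant to surface for review.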

Continuous performance monitoring ensures models remain reliable after deployment. Tracking precision, recall, and false-positive rates helps identify early signs of drift. A governance dashboard can alert teams when thresholds are exceeded, triggering retraining or rollback procedures.
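The threshold check behind such a dashboard can be sketched as follows. The metric definitions are standard, but the threshold values, the weekly confusion counts, and the function names are assumptions chosen for illustration.

```python
def confusion_metrics(tp, fp, tn, fn):
    """Precision, recall, and false-positive rate from confusion-matrix counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    fpr = fp / (fp + tn) if (fp + tn) else 0.0
    return {"precision": precision, "recall": recall, "false_positive_rate": fpr}

# Hypothetical limits: "min" metrics alert when they fall below the limit,
# "max" metrics alert when they rise above it.
THRESHOLDS = {
    "precision": ("min", 0.80),
    "recall": ("min", 0.85),
    "false_positive_rate": ("max", 0.10),
}

def breached(metrics, thresholds=THRESHOLDS):
    """Return the names of metrics that are outside their limits."""
    alerts = []
    for name, (kind, limit) in thresholds.items():
        value = metrics[name]
        if (kind == "min" and value < limit) or (kind == "max" and value > limit):
            alerts.append(name)
    return alerts

launch_week = confusion_metrics(tp=90, fp=10, tn=890, fn=10)
this_week = confusion_metrics(tp=70, fp=30, tn=870, fn=30)

launch_alerts = breached(launch_week)
current_alerts = breached(this_week)
```

With these counts the launch week raises no alerts, while the later week breaches both precision and recall. A non-empty alert list is what would trigger the retraining or rollback procedure.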

Human-in-the-loop auditing is another best practice. Involving both clinicians and data scientists ensures that model behavior aligns with medical logic and ethical standards. Combining quantitative metrics with qualitative review creates a more holistic approach to auditing AI systems.

Governance and Compliance in AI Risk Management

Strong governance frameworks form the backbone of responsible AI. Effective policies integrate ethical principles, operational controls, and regulatory compliance.

Regulations such as HIPAA in the United States and GDPR in the European Union mandate secure handling of health data, forming a key part of healthcare AI risk management. The U.S. FDA regulates many AI-based tools as medical devices, and in the EU such tools fall under the Medical Device Regulation (MDR). Both regimes expect documentation of how algorithms are trained, tested, and updated. Adherence to these standards supports safety and eases deployment across jurisdictions.

Internal governance policies should define approval workflows for AI deployment, assign risk ownership, and specify documentation requirements. Every stage—from data ingestion to model retirement—should have an accountable lead. Routine risk assessments and model audits should be embedded within the organization’s existing quality management system.

Responsible deployment means more than legal compliance. It involves regular communication with end users, feedback loops for clinicians, and continuous evaluation of social and ethical impact. Organizations that treat AI governance as a living process, rather than a one-time project, are better equipped to handle future regulatory updates and evolving technologies.

For a detailed exploration of governance frameworks, visit Pacific AI’s AI governance resource.

Case Examples and Mitigation Lessons

Real-world examples illustrate how failures can occur—and how structured risk management prevents recurrence.

Case 1: Diagnostic bias in imaging

A hospital implemented an AI system for detecting lung abnormalities, only to discover that accuracy dropped significantly for certain patient groups. The root cause was a lack of diversity in the training dataset. The mitigation strategy involved retraining with global datasets and adding fairness testing before deployment. Regular audits are now mandatory before any diagnostic model goes live.

Case 2: Model drift in readmission prediction

A predictive algorithm designed to estimate patient readmission risk began producing inflated scores after major demographic changes during the COVID-19 pandemic. Continuous monitoring detected the drift, prompting retraining with updated data. This incident reinforced the need for ongoing validation rather than static certification.

Case 3: Data privacy exposure

An AI model designed to summarize clinician notes accidentally included personal identifiers in outputs shared with third parties. The breach highlighted the need for automated redaction and better data access controls. The hospital introduced stricter data handling policies and multi-level review for all outputs generated by language models.
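Automated redaction of the kind this case called for can start with simple pattern matching on model outputs before they leave the organization. The sketch below is deliberately minimal and is not a complete de-identification method: the four patterns, the placeholder tokens, and the sample note are assumptions, and real clinical text also needs handling for names, dates, addresses, and other identifiers.

```python
import re

# Hypothetical patterns for a few common identifier formats (US-style).
PATTERNS = [
    ("[SSN]", re.compile(r"\b\d{3}-\d{2}-\d{4}\b")),
    ("[PHONE]", re.compile(r"\b\d{3}[-.]\d{3}[-.]\d{4}\b")),
    ("[EMAIL]", re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")),
    ("[MRN]", re.compile(r"\bMRN[:#\s]*\d+\b", re.IGNORECASE)),
]

def redact(text):
    """Replace matched identifiers with placeholders; return (text, match count)."""
    hits = 0
    for placeholder, pattern in PATTERNS:
        text, n = pattern.subn(placeholder, text)
        hits += n
    return text, hits

summary = ("Pt (MRN: 448812) reachable at 555-201-7788 or "
           "j.doe@example.org; follow up in 2 weeks.")
clean, count = redact(summary)
```

Returning the match count alongside the text lets a reviewer see which outputs contained identifiers at all, which supports the multi-level review the hospital introduced.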

Each of these examples underscores one principle: prevention is possible only through proactive auditing and open reporting. Transparency in failure builds resilience and regulatory confidence.

Tools and Frameworks for AI Risk Mitigation

Managing AI risk requires more than policies—it requires the right tools.

Fairness analysis toolkits such as Fairlearn and Aequitas help detect where models behave inconsistently across patient subgroups, while explainability tools such as SHAP and LIME help show which features are driving those differences. Integrating these tools early in development makes it more likely that bias is addressed before deployment.

Model monitoring platforms like WhyLabs, Arize, or Weights & Biases allow teams to visualize model performance in real time. They can automatically alert users when predictions deviate from expected patterns or when new data introduces unseen variables.

Governance frameworks and standards provide the organizational foundation for ethical AI. Frameworks such as the NIST AI Risk Management Framework, ISO/IEC 42001, and the Coalition for Health AI (CHAI) guidelines outline how to classify risks, document lifecycle events, and manage accountability. These standards align technical validation with legal and ethical oversight.

Testing and evaluation environments are another critical component. Before an AI system is deployed in a live clinical setting, it should undergo rigorous scenario testing. Pacific AI’s Testing solutions provide structured evaluation workflows to ensure generative and predictive models meet safety requirements before going live.

By combining these tools and frameworks, healthcare organizations can implement a full lifecycle approach—from design and training to deployment and monitoring—that keeps patient safety and regulatory compliance at the center.

Conclusion: Building a Safer AI Future in Healthcare

AI will continue to shape the future of healthcare. From predictive diagnostics to administrative automation, its benefits are undeniable. But with innovation comes responsibility. Healthcare AI risk management is about ensuring that progress does not come at the expense of safety, fairness, or trust.

Organizations that integrate ethical principles, strong governance, and continuous oversight into their AI workflows will be the ones that define the future of trustworthy healthcare technology. Achieving this requires collaboration among data scientists, clinicians, regulators, and patients—all working toward the same goal: safe and equitable use of AI.

A safer AI future in healthcare will depend on continuous auditing, transparency, and human-centered design. By embedding these practices into the heart of every AI initiative, the industry can move forward with confidence, knowing that innovation and accountability can thrive together.