Artificial intelligence is no longer confined to research labs or data science departments. It now guides clinical diagnoses, approves credit applications, supports legal reviews, and powers global supply chains. Yet as AI becomes embedded in decisions that affect millions of lives, one fundamental question keeps surfacing: do we really understand how these systems work?

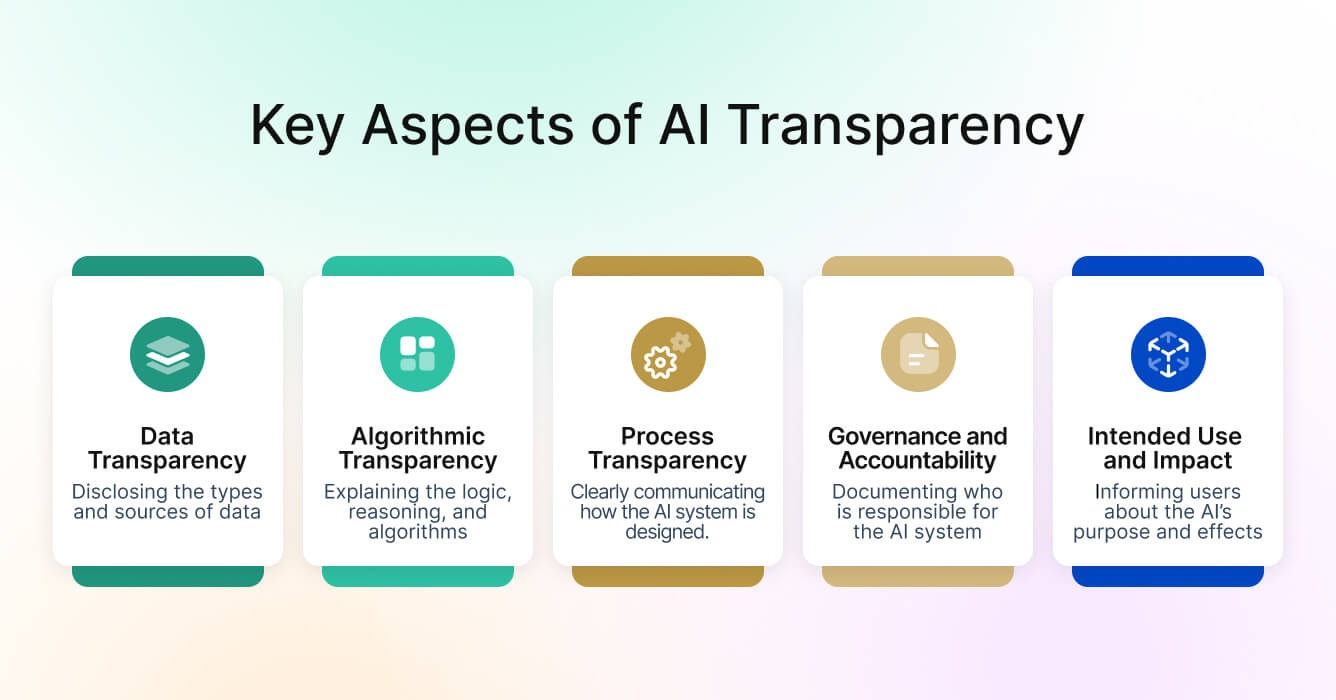

This question lies at the heart of AI transparency—the practice of making artificial intelligence systems understandable, explainable, and traceable. Transparency means that every stage of an AI system, from data collection to decision-making, can be interpreted and reviewed by humans. It is the foundation of responsible AI governance, ensuring that systems operate ethically, comply with regulations, and can be trusted by both experts and the public.

As the complexity of AI grows, transparency is what allows organizations to balance innovation with accountability. It gives regulators the visibility they need to safeguard the public interest, and it empowers users to understand and trust the technology that increasingly shapes their daily lives.

Why Transparency Is Essential in Artificial Intelligence Systems

Transparency Builds the Foundation of Trust

Trust is the cornerstone of successful AI adoption. People are more likely to accept AI-driven recommendations when they understand how and why those decisions are made. In healthcare, for instance, a clinician needs to know how an AI arrived at a diagnosis before relying on its recommendation. In finance, a loan applicant has a right to know why an algorithm approved or rejected their application.

A transparent AI system allows stakeholders to verify these decisions. By documenting data sources, explaining model logic, and clarifying limitations, organizations strengthen trust and credibility. Transparency is not just good ethics—it is good business.

Transparency Enables Accountability and Ethical Integrity

AI transparency ensures that responsibility does not vanish inside a black box. When models are traceable and decisions are documented, accountability becomes enforceable. If an AI system causes harm, developers and regulators can follow the evidence trail to understand what went wrong and why. This visibility prevents misuse, promotes fairness, and upholds the ethical integrity of organizations that deploy AI at scale.
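
One way to make such an evidence trail harder to alter after the fact is to chain each logged decision to the previous entry with a cryptographic hash. The sketch below is a minimal illustration that uses only Python's standard library. The `AuditLog` class and its record fields (`model_version`, `inputs`, `output`) are hypothetical choices, not the format of any particular product.

```python
import hashlib
import json
from dataclasses import dataclass, field


@dataclass
class AuditLog:
    """Append-only decision log; each entry stores the hash of the previous entry."""
    entries: list = field(default_factory=list)

    def append(self, model_version: str, inputs: dict, output: str) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else "0" * 64
        body = {
            "model_version": model_version,
            "inputs": inputs,
            "output": output,
            "prev_hash": prev_hash,
        }
        # Canonical JSON (sorted keys) so identical content always hashes identically.
        payload = json.dumps(body, sort_keys=True).encode("utf-8")
        entry = {**body, "hash": hashlib.sha256(payload).hexdigest()}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash; editing any past entry breaks the chain."""
        prev_hash = "0" * 64
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash:
                return False
            payload = json.dumps(body, sort_keys=True).encode("utf-8")
            if hashlib.sha256(payload).hexdigest() != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.append("credit-model-1.3", {"income": 52000, "debt_ratio": 0.31}, "approved")
log.append("credit-model-1.3", {"income": 18000, "debt_ratio": 0.62}, "declined")
print(log.verify())  # True
log.entries[0]["output"] = "declined"  # simulate someone rewriting history
print(log.verify())  # False
```

A hash chain shows that tampering occurred but does not prevent it. In practice, the latest hash would also be stored somewhere the log's operators cannot modify.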

Transparency Helps Identify and Reduce Bias

Bias in AI remains one of the most pressing governance challenges. Hidden bias in datasets or model design can lead to unfair outcomes for specific groups. Transparent systems make it easier to uncover and address these issues early.

Pacific AI’s analysis, Unveiling Bias in Language Models: Gender, Race, Disability, and Socioeconomic Perspectives, demonstrates how documenting data provenance and testing model fairness are crucial steps in identifying and reducing bias. Transparency turns ethical AI from an aspiration into a measurable process.
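
Fairness testing often starts with simple group-level metrics. The sketch below computes three of them from a list of (group, decision) pairs: the selection rate for each group, the demographic parity difference, and the disparate impact ratio. The data is synthetic and the group labels are placeholders. The 0.8 cut-off in the final comment is the "four-fifths" heuristic from US employment guidance. It appears here only as an example threshold, not as a universal standard.

```python
from collections import defaultdict


def selection_rates(records):
    """Share of positive decisions (1) per group, from (group, decision) pairs."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, decision in records:
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}


def fairness_report(records):
    rates = selection_rates(records)
    highest, lowest = max(rates.values()), min(rates.values())
    return {
        "selection_rates": rates,
        # Demographic parity difference: gap between best- and worst-treated group.
        "parity_difference": highest - lowest,
        # Disparate impact ratio: worst-treated rate divided by best-treated rate.
        "disparate_impact_ratio": lowest / highest if highest > 0 else float("nan"),
    }


# Synthetic decisions for illustration: 1 = approved, 0 = declined.
decisions = (
    [("group_a", 1)] * 60 + [("group_a", 0)] * 40
    + [("group_b", 1)] * 42 + [("group_b", 0)] * 58
)
report = fairness_report(decisions)
print(report["selection_rates"])                   # {'group_a': 0.6, 'group_b': 0.42}
print(round(report["parity_difference"], 2))       # 0.18
print(round(report["disparate_impact_ratio"], 2))  # 0.7
# A ratio below 0.8 fails the four-fifths heuristic and warrants investigation.
```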

Transparency Strengthens System Safety and Performance

Transparent systems are easier to audit, test, and monitor. When developers can explain why an AI produces a certain result, they can better anticipate how it might behave under different conditions. Combined with continuous monitoring, this explainability makes it possible to detect performance drift or unsafe outcomes before they cause harm in the real world.
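
One widely used way to detect input drift is the population stability index (PSI). PSI compares how a feature is distributed in production with a reference sample, such as the training data. The sketch below is a self-contained illustration. The bin edges, the smoothing constant `eps`, and the 0.2 alert threshold are rule-of-thumb assumptions, not values prescribed by any regulation.

```python
import math


def bin_proportions(values, edges):
    """Fraction of values in each bin; `edges` are sorted interior bin boundaries."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        idx = sum(v >= e for e in edges)  # how many boundaries v has passed
        counts[idx] += 1
    return [c / len(values) for c in counts]


def population_stability_index(reference, current, edges, eps=1e-6):
    """PSI = sum over bins of (cur - ref) * ln(cur / ref); eps guards against log(0)."""
    ref = bin_proportions(reference, edges)
    cur = bin_proportions(current, edges)
    psi = 0.0
    for r, c in zip(ref, cur):
        r, c = max(r, eps), max(c, eps)
        psi += (c - r) * math.log(c / r)
    return psi


edges = [25, 50, 75]
reference = list(range(100))    # e.g. a feature's values in the training data
unchanged = list(range(100))    # production data with the same distribution
shifted = list(range(30, 130))  # production data that has drifted upward

print(population_stability_index(reference, unchanged, edges))  # 0.0
psi = population_stability_index(reference, shifted, edges)
if psi > 0.2:  # common rule of thumb for a significant shift
    print(f"Drift alert: PSI = {psi:.2f}, flag model for review")
```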

In regulated sectors such as healthcare, this level of oversight is essential. Safety depends not only on model accuracy but also on the human ability to understand how and when the system might fail. Transparency ensures that AI remains a partner, not a mystery.

AI Transparency Regulations and Global Governance Frameworks

Governments and standard-setting organizations are now embedding transparency directly into law. It is becoming a central pillar of AI regulation worldwide, defining what it means for systems to be accountable, auditable, and safe.

The EU AI Act and Its Role in Transparency

The European Union’s AI Act is the first comprehensive law to formalize transparency obligations for artificial intelligence. Providers of high-risk AI systems must prepare technical documentation and give deployers instructions for use that describe the system’s function, intended purpose, and limitations.

This documentation covers, among other things:

- The datasets used in training and validation

- The intended scope and environment for deployment

- Human oversight mechanisms

- Post-market monitoring procedures

- Risk mitigation and bias detection methods

The Act also mandates user awareness. Whenever individuals interact directly with an AI system, such as a chatbot, they must be informed that they are dealing with artificial intelligence, unless this is already obvious from the context. People affected by decisions that high-risk systems make or assist with, such as automated scoring, must likewise be told that such a system is in use. This level of openness holds AI to disclosure standards that earlier technologies were rarely subject to.

The EU AI Act is widely treated as a global benchmark and has influenced AI policy debates in other jurisdictions, including Canada, Brazil, Japan, and the United States.

Global Frameworks Promoting Transparency

Several international bodies have already established transparency as a defining principle of ethical AI:

- The NIST AI Risk Management Framework (United States) emphasizes traceability and explainability across the model lifecycle.

- The OECD AI Principles highlight transparency and responsible disclosure as prerequisites for accountability.

- UNESCO’s Recommendation on the Ethics of Artificial Intelligence positions transparency and explainability as essential to human rights, safety, and non-discrimination.

These frameworks, alongside emerging standards like ISO/IEC 42001, give organizations a global reference point for aligning AI transparency with compliance.

Pacific AI’s Role in Operationalizing Transparency

Pacific AI’s Q3 2025 Governance Suite turns these principles into practice. Covering more than 250 international AI laws, standards, and frameworks, it helps organizations implement transparency across design, deployment, and monitoring.

The latest release introduces:

- A Transparency Compliance Map that cross-references global obligations under the EU AI Act, NIST RMF, ISO/IEC 42001, and local data protection laws.

- A Model Documentation Generator that automatically structures transparency reports, including data lineage, explainability methods, and ethical safeguards.

- Live Transparency Dashboards that provide continuous oversight and automated audit logs for accountability.

This suite is a practical answer to one of AI’s hardest challenges: translating abstract transparency principles into daily operational routines.

Best Practices for Implementing AI Transparency

Transparency begins not at deployment but at design. Building truly transparent AI requires technical precision, ethical commitment, and clear communication across every layer of development.

Integrate Transparency into System Architecture

Transparency should be a design goal, not an afterthought. During the architecture phase, developers must document data inputs, model logic, assumptions, and decision boundaries. A transparent design allows teams to understand the implications of every modification and update throughout the model’s lifecycle.

Document with Clarity and Structure

Documentation is the foundation of transparency. Each system should maintain detailed records that describe its objectives, datasets, algorithms, evaluation metrics, and risks. The documentation should be detailed enough for technical experts yet clear enough for compliance teams and regulators.

Elements of Effective Transparency Documentation

- Purpose and intended scope of the AI system

- Data sources, selection criteria, and quality controls

- Known biases and limitations

- Explanation of algorithms and interpretability methods

- Human oversight mechanisms and escalation paths

- Governance structure and responsible parties

- Testing history, retraining schedules, and performance benchmarks
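
These elements map naturally onto a structured record that can be versioned alongside the model. The sketch below shows a hypothetical Python schema, loosely in the spirit of model cards. The class name, the field names, and the example values are illustrative assumptions, not the format of any particular tool or regulation. A simple completeness check lists the fields that are still empty, so gaps can be flagged before a release.

```python
from dataclasses import dataclass, field, fields


@dataclass
class TransparencyRecord:
    """Hypothetical documentation record mirroring the elements listed above."""
    system_name: str
    purpose_and_scope: str = ""
    data_sources: list = field(default_factory=list)
    known_limitations: list = field(default_factory=list)
    interpretability_methods: list = field(default_factory=list)
    oversight_and_escalation: str = ""
    responsible_owner: str = ""
    test_history: list = field(default_factory=list)

    def missing_fields(self) -> list:
        """Names of fields that are still empty (empty string or empty list)."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]


record = TransparencyRecord(
    system_name="triage-assistant",
    purpose_and_scope="Prioritize incoming radiology reports for clinician review.",
    data_sources=["De-identified reports, 2019-2023, two hospital sites"],
    known_limitations=["Not validated on pediatric patients"],
    responsible_owner="Clinical AI governance board",
)
print(record.missing_fields())
# ['interpretability_methods', 'oversight_and_escalation', 'test_history']
```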

Organizations can streamline this process using Pacific AI’s AI Compliance Certification Tool, which provides pre-structured documentation templates aligned with ISO/IEC and NIST frameworks.

Use Explainability Tools and Transparent Models

Explainability translates technical reasoning into human understanding. Methods such as SHAP and LIME estimate how much each input feature contributed to a particular prediction. Their outputs are usually visualized so that developers and auditors can see which variables drive decisions. Transparent AI systems are also accompanied by model cards and datasheets that describe training data, performance metrics, and contextual limitations.
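
SHAP builds on Shapley values from cooperative game theory. When a model has only a handful of features, the exact values can be computed by brute force, which makes the idea concrete. The sketch below does this for a toy credit-scoring function. The model, its feature names, and the all-zero baseline used to stand in for "absent" features are illustrative assumptions; in practice the baseline is often an average or reference case. This is not the algorithm the `shap` library uses, which relies on much more efficient methods.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, instance, baseline):
    """Exact Shapley values; features outside a coalition take their baseline value."""
    names = list(instance)
    n = len(names)

    def value(coalition):
        inputs = {k: (instance[k] if k in coalition else baseline[k]) for k in names}
        return model(inputs)

    phi = {}
    for i in names:
        others = [k for k in names if k != i]
        total = 0.0
        for size in range(n):
            # Weight = |S|! (n - |S| - 1)! / n! for every coalition S of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))
        phi[i] = total
    return phi


def credit_score(x):
    # Hypothetical toy model with one interaction term.
    return 0.5 * x["income"] - 2.0 * x["debt_ratio"] + 0.1 * x["income"] * x["years_employed"]


# Toy applicant: income in tens of thousands, debt ratio, years employed.
applicant = {"income": 4.0, "debt_ratio": 0.5, "years_employed": 10.0}
baseline = {"income": 0.0, "debt_ratio": 0.0, "years_employed": 0.0}

phi = shapley_values(credit_score, applicant, baseline)
print({k: round(v, 3) for k, v in phi.items()})
# {'income': 4.0, 'debt_ratio': -1.0, 'years_employed': 2.0}
```

The attributions add up to the difference between the applicant's score and the baseline score (5.0 here). This efficiency property makes Shapley-based explanations easy to audit. The interaction term's contribution is split evenly between `income` and `years_employed`. Because the number of coalitions grows exponentially with the number of features, practical tools approximate these values.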

Ensure Human Oversight and Governance

Transparency and human judgment go hand in hand. Regular audits, ethics committees, and AI governance boards ensure that decisions remain accountable. Humans must have the authority to override or suspend models that show signs of drift, bias, or safety risks.
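
A simple way to keep humans in control is to route low-confidence predictions to a reviewer. Reviewers also get a switch that suspends automated decisions entirely. The sketch below illustrates that control flow. The `OversightGate` class, the 0.85 confidence threshold, and the routing labels are hypothetical choices made for illustration.

```python
from dataclasses import dataclass


@dataclass
class OversightGate:
    """Decides whether a model output may be applied without a human reviewer."""
    confidence_threshold: float = 0.85
    suspended: bool = False
    suspension_reason: str = ""

    def suspend(self, reason: str) -> None:
        """Human override: stop all automated decisions, e.g. after a drift or bias alert."""
        self.suspended = True
        self.suspension_reason = reason

    def resume(self) -> None:
        """Re-enable automation once reviewers have cleared the issue."""
        self.suspended = False
        self.suspension_reason = ""

    def route(self, prediction: str, confidence: float) -> str:
        if self.suspended:
            return "human_review"  # every case goes to a person while suspended
        if confidence < self.confidence_threshold:
            return "human_review"  # the model is unsure, so escalate
        return f"auto:{prediction}"


gate = OversightGate()
print(gate.route("approve", 0.93))  # auto:approve
print(gate.route("decline", 0.61))  # human_review
gate.suspend("Drift alert on the income feature")
print(gate.route("approve", 0.99))  # human_review
```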

Communicate Transparency to Stakeholders

Transparency achieves its full purpose only when information is effectively shared. Organizations should adopt communication strategies that make technical details understandable to all audiences—executives, regulators, clients, and the public.

Practical Examples of Transparency Communication

- Transparency summaries that explain system purpose and logic in plain language

- Model transparency dashboards that display real-time performance and fairness metrics

- Public transparency reports that show how AI systems comply with governance standards

- Internal transparency briefings to train employees and ensure consistent understanding

For healthcare and life sciences applications, Pacific AI’s Generative AI Governance in Healthcare framework provides practical examples of how transparency supports clinical safety and patient trust.

Common Challenges in Achieving Transparency at Scale

Achieving transparency in AI is not easy. It involves technical, legal, and cultural challenges that require careful navigation.

The Complexity of Modern AI Models

Deep learning systems can contain billions of parameters with non-linear interactions, which puts complete interpretability out of reach with current methods. Simplified explanations can obscure nuance, while overly technical detail can overwhelm users. The challenge is balancing clarity with accuracy.

Intellectual Property and Competitive Concerns

Organizations fear that revealing model details could compromise proprietary knowledge. Transparency initiatives must therefore differentiate between essential accountability information and confidential trade secrets, ensuring both openness and protection.
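
One practical way to draw this line is to tag each documentation field as public or confidential and to generate external disclosures from the same source record. The sketch below is a hypothetical illustration. The field names, the example values, and the two-level classification are assumptions. Deciding which fields must be disclosed remains a legal judgment.

```python
PUBLIC, CONFIDENTIAL = "public", "confidential"

# Each entry maps a field name to (classification, value). Values are illustrative.
model_documentation = {
    "intended_purpose": (PUBLIC, "Rank job applications for recruiter review"),
    "known_limitations": (PUBLIC, "Lower accuracy on non-English CVs"),
    "human_oversight": (PUBLIC, "Recruiter makes every final decision"),
    "feature_weights": (CONFIDENTIAL, {"skills_match": 0.42, "tenure": 0.17}),
    "training_pipeline": (CONFIDENTIAL, "internal repository and hyperparameters"),
}


def public_view(documentation: dict) -> dict:
    """Only the fields cleared for external disclosure."""
    return {name: value for name, (level, value) in documentation.items() if level == PUBLIC}


def internal_view(documentation: dict) -> dict:
    """Full record, e.g. for auditors or regulators under confidentiality terms."""
    return {name: value for name, (_, value) in documentation.items()}


print(sorted(public_view(model_documentation)))
# ['human_oversight', 'intended_purpose', 'known_limitations']
```

Deriving both views from a single record keeps the public summary from drifting out of sync with the internal documentation.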

Data Privacy and Regulation

Transparency requires sharing information about how data is used, but privacy laws such as the GDPR limit what can be disclosed. Compliance teams must work with data protection officers to balance transparency against privacy obligations.
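
A common technique for reconciling transparency with privacy is to publish only aggregate statistics and to suppress any group small enough that individuals could be re-identified. The sketch below applies a minimum cell size before releasing approval rates for each group. The threshold of 10 and the region labels are illustrative assumptions, since appropriate thresholds depend on the data and the applicable guidance. Suppression alone does not guarantee anonymity, because a suppressed cell can sometimes be recovered from published totals.

```python
from collections import Counter

MIN_CELL_SIZE = 10  # illustrative threshold; set it according to data-protection guidance


def releasable_rates(records, min_cell=MIN_CELL_SIZE):
    """Approval rate per group; groups smaller than min_cell are suppressed (None)."""
    totals = Counter(group for group, _ in records)
    approvals = Counter(group for group, approved in records if approved)
    return {
        group: (approvals[group] / n if n >= min_cell else None)
        for group, n in totals.items()
    }


records = (
    [("region_north", True)] * 30 + [("region_north", False)] * 20
    + [("region_south", True)] * 3 + [("region_south", False)] * 2
)
print(releasable_rates(records))
# {'region_north': 0.6, 'region_south': None}
```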

Fragmented Global Standards

Each jurisdiction defines transparency differently. Some focus on algorithmic disclosure, others on outcome explanation. Pacific AI’s Governance Suite simplifies this by harmonizing obligations from multiple jurisdictions into one unified policy framework.

Organizational Readiness and Resources

Maintaining transparency requires skilled personnel, automation tools, and sustained leadership support. Organizations that treat transparency as a strategic investment, rather than a compliance burden, are the ones most likely to succeed.

Key Insights and Expert Takeaways

1. AI Transparency Is the Cornerstone of Trustworthy AI

Transparency ensures that artificial intelligence remains understandable and aligned with human values. It transforms algorithms from opaque systems into auditable, accountable tools that people can rely on.

2. Global Regulations Are Making Transparency Mandatory

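The EU AI Act makes transparency a legal requirement for many AI systems. Voluntary frameworks such as the NIST AI RMF, the OECD AI Principles, and UNESCO’s Recommendation on the Ethics of Artificial Intelligence are establishing it as an expected norm elsewhere. Compliance is no longer optional; it is the cost of participation in the global AI ecosystem.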

3. Documentation and Explainability Define Responsible AI Governance

Transparent systems are built on clear documentation, explainable models, and regular auditing. These elements protect organizations from risk while promoting fairness and accountability.

4. Pacific AI Leads in Operationalizing Transparency

With its Q3 2025 Governance Suite, Pacific AI provides the first global framework that integrates transparency across laws, sectors, and AI maturity levels. It enables real-time compliance and helps organizations demonstrate responsibility with measurable proof.

5. Transparency Is the Future of Sustainable AI Innovation

As AI becomes more agentic and autonomous, transparency will be the principle that ensures continued human oversight. It is the foundation of ethical AI management and the safeguard that allows innovation to scale safely.

Conclusion: Building a Transparent and Trustworthy AI Future

Transparency is not only an ethical choice; it is the foundation of responsible progress. In an era when AI systems influence financial markets, healthcare outcomes, and public policy, the ability to explain and justify automated decisions makes the difference between trust and skepticism. Understanding what AI transparency is, and what it demands, is essential to building that trust.

By embedding transparency into every stage of the AI lifecycle, organizations protect both their stakeholders and their reputation. They build systems that are not just efficient but also accountable, fair, and aligned with human expectations.

The future of AI depends on transparency. And that future is already here.

To implement transparency across your organization, download the Pacific AI Governance Suite Q3 2025 release, which consolidates transparency, auditability, and compliance frameworks from over 30 countries into one unified system. It’s the most practical way to make transparency actionable, measurable, and continuous.

For a practical assessment of how AI transparency and governance principles are applied in real-world systems, explore the Quizzes & Surveys section to benchmark your organization’s current maturity.

Explore the Pacific AI Governance Suite and start building trust through transparent and responsible AI today.