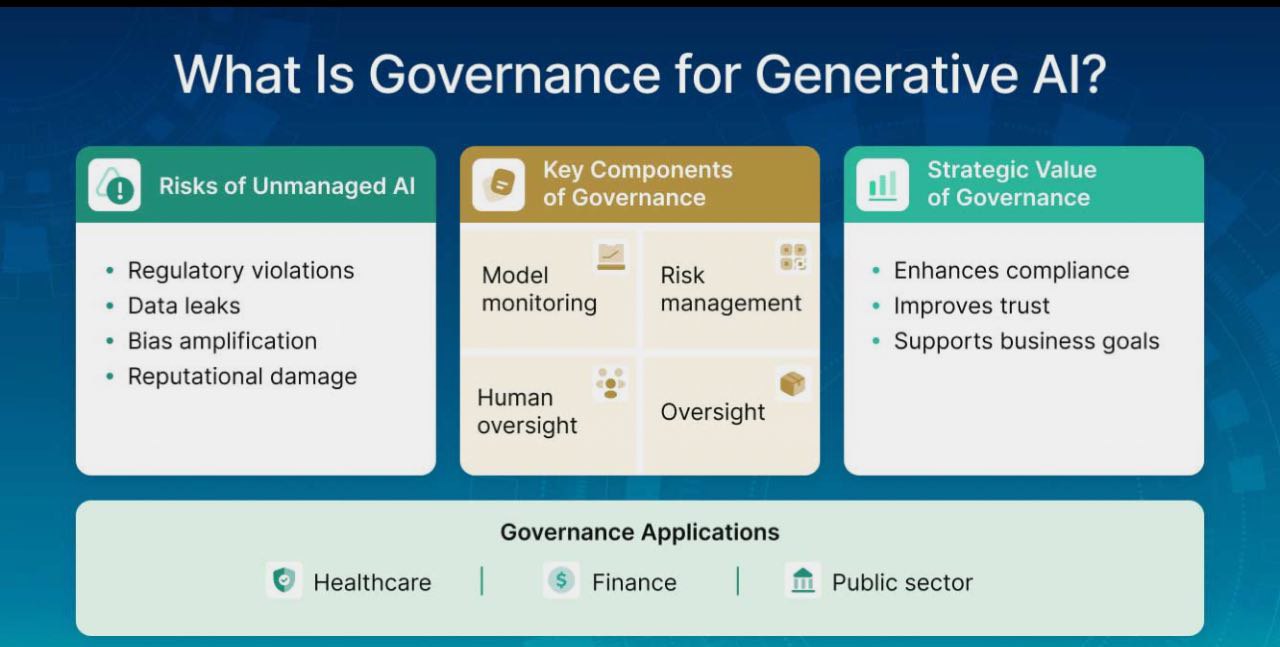

As generative AI reshapes how organizations operate, innovate, and communicate, the need for rigorous governance becomes urgent. Generative AI governance refers to the strategic oversight of AI systems that generate content, insights, or decisions using complex models such as large language models (LLMs). This form of governance ensures AI is deployed ethically, transparently, and in compliance with relevant laws and organizational policies. Without it, businesses risk regulatory violations, data leaks, bias amplification, and reputational harm. This article explores why governance tailored for generative AI is essential, the challenges of unmanaged systems, core components of effective governance, real-world applications, and a step-by-step strategy for implementation. Organizations, especially in healthcare, finance, and the public sector, can use this guide to develop governance programs that turn AI risk into strategic advantage.

Introduction: Why Generative AI Demands a New Approach to Governance

The rise of generative AI represents a fundamental shift in how organizations engage with artificial intelligence. Unlike traditional software or even predictive AI systems, generative models such as GPT-4 and Stable Diffusion produce original outputs based on probabilistic reasoning. These outputs are often untraceable, unpredictable, and potentially problematic.

Conventional IT governance frameworks are ill-equipped to manage this unpredictability. While they handle access control, change management, and incident response, they lack the ethical, legal, and contextual oversight generative AI requires. This article builds a comprehensive foundation for understanding and implementing governance frameworks specifically designed for generative tools.

Why Generative AI Requires Specialized Governance

Generative AI tools differ markedly from traditional software systems:

- Outputs Are Unpredictable: Even with the same prompt, outputs can vary dramatically.

- Risk of Hallucination: Generative models may produce false or misleading information that appears credible.

- Sensitive Data Exposure: Prompt injections and model leaks can inadvertently expose private or regulated data.

- Bias and Fairness Issues: Models trained on biased data can perpetuate or amplify existing societal biases.

Without dedicated governance:

- Legal and compliance risks multiply.

- Trust in AI outputs erodes.

- Operational inefficiencies arise from inconsistent tool usage.

In short, generative AI demands governance that is adaptive, proactive, and deeply integrated with organizational ethics and policy.

Common Challenges of Ungoverned Generative AI

Organizations adopting generative AI without a structured governance framework often encounter a range of operational and ethical challenges that can escalate quickly and disrupt business continuity. One of the most pressing issues is the lack of traceability in AI-generated outputs. Because these models do not reference specific sources in their outputs, it becomes extremely difficult to verify the origin of the content or audit the decision-making process. This absence of provenance undermines accountability and complicates compliance with regulatory standards that require explainability.

Closely tied to this is the issue of content quality. Generative AI can produce outputs that are not only incorrect but potentially inappropriate or offensive. These content failures risk tarnishing a brand’s reputation, especially if the materials violate internal standards or fall afoul of industry regulations. Without guardrails, what begins as an innocuous content generation task can quickly escalate into a public relations or legal crisis.

Data and privacy violations represent another high-stakes risk. Generative models may inadvertently expose personally identifiable information (PII) or protected health information (PHI), especially when prompts or training data are poorly controlled. In regulated sectors such as healthcare or finance, even a minor lapse can trigger significant penalties and damage stakeholder trust.
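One way to reduce this exposure is to scrub prompts before they reach a model. The sketch below is illustrative only: the function name `redact_prompt` and the two regular expressions are assumptions made for this example, and they cover only email addresses and US Social Security number formats. A production control would need much broader PII and PHI detection.

```python
import re

# Illustrative patterns only (assumptions for this sketch); real PII/PHI
# detection needs far broader coverage than two regular expressions.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact_prompt(text: str) -> str:
    """Replace every match of each pattern with a bracketed placeholder."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    print(redact_prompt("Email jane.doe@example.com about SSN 123-45-6789."))
```

Redacting before the model call, rather than after, keeps sensitive values out of both the model provider's logs and the organization's own prompt logs.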

Compounding these issues is the potential for reputational harm. AI-generated errors or controversial outputs, particularly when circulated publicly or within critical workflows, can attract media scrutiny and erode user confidence. Organizations that cannot demonstrate robust oversight mechanisms are more vulnerable to backlash, both from customers and regulators.

Finally, a decentralized or ad hoc approach to governance often leads to fragmented oversight. Different teams may adopt their own tools and standards, resulting in inconsistencies, duplicated effort, and increased exposure to unmanaged risk. Without a cohesive strategy, the organization cannot ensure that AI usage aligns with its broader mission, values, and compliance obligations.

These interconnected risks highlight the critical importance of developing a comprehensive and scalable governance strategy that embeds accountability, quality assurance, and regulatory alignment into every phase of generative AI deployment.

Key Components of Generative AI Governance

A robust governance framework includes several foundational elements:

Model Monitoring

Track model performance, behavioral drift, and output accuracy over time. Monitoring helps detect anomalies early and assess fitness-for-purpose.
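As a rough illustration of drift detection, the sketch below tracks the share of outputs that human reviewers flag as problematic over a sliding window and compares it with a baseline. The class name `FlagRateMonitor`, the window size, and the tolerance are hypothetical choices for this example, not a prescribed method; real monitoring would typically combine several signals.

```python
from collections import deque


class FlagRateMonitor:
    """Tracks the fraction of recent outputs flagged by reviewers.

    Hypothetical sketch: baseline_rate, window, and tolerance are
    assumptions an organization would set for itself.
    """

    def __init__(self, baseline_rate: float, window: int = 100,
                 tolerance: float = 0.05) -> None:
        self.baseline_rate = baseline_rate
        self.tolerance = tolerance
        self.recent = deque(maxlen=window)  # oldest entries drop off automatically

    def record(self, flagged: bool) -> None:
        self.recent.append(flagged)

    def current_rate(self) -> float:
        return sum(self.recent) / len(self.recent) if self.recent else 0.0

    def drifted(self) -> bool:
        # Only judge drift once the window is full, to avoid noisy early alerts.
        window_full = len(self.recent) == self.recent.maxlen
        return window_full and self.current_rate() > self.baseline_rate + self.tolerance
```

A team might call `record()` each time a reviewer accepts or flags an output and check `drifted()` on a schedule, escalating to the governance committee when it returns true.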

Ethical Use Policies

Clearly define acceptable and prohibited uses of generative AI. Align these policies with organizational values, legal constraints, and industry standards.

Risk Management

Use structured frameworks to identify, rank, and mitigate operational, technical, and reputational risks. Incorporate incident response protocols for AI-related events.
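A simple way to rank risks is a likelihood-times-impact score, a common convention in risk registers. The sketch below assumes 1 to 5 scales and a hypothetical `Risk` record; neither is mandated by any particular framework, and the example entries are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (minor) to 5 (severe)

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


def rank_risks(risks: list[Risk]) -> list[Risk]:
    """Return risks ordered from highest to lowest score."""
    return sorted(risks, key=lambda r: r.score, reverse=True)


if __name__ == "__main__":
    register = [
        Risk("Hallucinated content in published material", likelihood=4, impact=3),
        Risk("PHI exposed through a prompt", likelihood=2, impact=5),
        Risk("Inconsistent brand voice", likelihood=3, impact=2),
    ]
    for risk in rank_risks(register):
        print(risk.score, risk.name)
```

The ranking gives the governance team a defensible order in which to assign mitigations, though scores this coarse are a starting point for discussion rather than a final judgment.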

Human Oversight and Review

Introduce human-in-the-loop workflows for sensitive or high-impact use cases. Governance boards or review panels can vet new deployments.
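A human-in-the-loop workflow can be as simple as a routing rule that holds drafts from sensitive use cases for review. The sketch below is a minimal example; the use-case labels and the names `Draft` and `route` are assumptions for illustration, and each organization would define its own list of high-impact categories.

```python
from dataclasses import dataclass

# Hypothetical labels; the set of high-impact use cases is an
# organizational policy decision, not something fixed by this sketch.
HIGH_IMPACT_USE_CASES = {
    "clinical_documentation",
    "financial_decision",
    "public_communication",
}


@dataclass
class Draft:
    use_case: str
    text: str


def route(draft: Draft) -> str:
    """Send high-impact drafts to a human reviewer; release the rest."""
    if draft.use_case in HIGH_IMPACT_USE_CASES:
        return "human_review"
    return "auto_release"
```

Keeping the rule in one place makes it easy for a governance board to audit which use cases bypass review and to tighten the list as new deployments are vetted.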

Audit Trails and Documentation

Log prompts, model responses, user interventions, and rationale behind decisions. This ensures transparency, accountability, and compliance readiness.
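The sketch below shows one possible shape for such a log entry, capturing the prompt, response, reviewer intervention, and rationale. The field names are hypothetical, and the hash chaining is an added technique not described above: each record stores the hash of the previous one so that later edits to the log become detectable.

```python
import hashlib
import json
from datetime import datetime, timezone


def make_audit_record(user_id, model, prompt, response,
                      reviewer_action=None, rationale=None, prev_hash=""):
    """Build one audit entry; all field names are assumptions for this sketch."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user_id": user_id,
        "model": model,
        "prompt": prompt,
        "response": response,
        "reviewer_action": reviewer_action,
        "rationale": rationale,
        "prev_hash": prev_hash,  # links this entry to the one before it
    }
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    record["hash"] = hashlib.sha256(payload).hexdigest()
    return record


def verify_chain(records) -> bool:
    """Return True if no record was altered and the chain links are intact."""
    prev = ""
    for rec in records:
        body = {k: v for k, v in rec.items() if k != "hash"}
        payload = json.dumps(body, sort_keys=True).encode("utf-8")
        if rec["prev_hash"] != prev:
            return False
        if hashlib.sha256(payload).hexdigest() != rec["hash"]:
            return False
        prev = rec["hash"]
    return True
```

In practice the records would be written to append-only storage, and prompts containing sensitive data would be redacted or access-controlled before logging.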

These elements are often supported by governance platforms, explainability tools, and AI testing suites, which provide structure and repeatability.

Governance in Action: Sector-Specific Applications

Organizations across industries are embedding governance into generative AI workflows to unlock benefits while controlling risk:

Healthcare

Hospitals use governance tools to ensure AI-generated clinical content meets standards of care and privacy regulations. Tools validate outputs before clinicians see them, safeguarding both quality and liability. See real cases from John Snow Labs.

Finance

Risk and compliance teams implement model explainability, bias checks, and detailed audit logs to satisfy regulators and internal control functions.

Marketing and Communications

Content teams deploy prompt engineering, filtering tools, and brand alignment checks to maintain voice consistency and avoid legal pitfalls.

Public Sector

Government agencies use governance platforms to vet public-facing content and ensure alignment with transparency laws and public trust mandates.

These examples demonstrate that governance is not a blocker but an enabler of scalable, responsible innovation.

The Strategic Value of Scalable AI Governance

As organizations scale their use of generative AI, a cohesive governance program becomes a critical enabler of enterprise maturity. Governance is no longer simply a safeguard—it’s a growth lever.

Effective AI governance enhances regulatory compliance by ensuring that AI deployments align with complex and evolving legal landscapes, including GDPR, HIPAA, and the EU AI Act. With appropriate controls in place, businesses can innovate without fear of violating legal or ethical norms.

Operationally, governance streamlines workflows and reduces inefficiencies. Organizations minimize duplication and avoid reactive problem-solving by codifying review protocols, embedding oversight tools, and standardizing policies across departments. This saves time and boosts confidence in AI systems as trustworthy partners in core operations.

Strategically, a well-governed AI program supports the organization’s overarching mission. It ensures that generative AI augments, not undermines, business goals. Clear usage policies prevent internal conflicts and reinforce consistency across use cases.

Most importantly, governance builds trust. Whether with regulators, customers, or internal teams, transparency and accountability are foundational to adoption. A mature governance framework sends a strong signal: this organization takes its AI responsibilities seriously.

When AI-related issues arise, as they inevitably will, a robust governance structure allows for fast, responsible, well-documented responses, containing risk before it becomes a crisis.

A Practical Roadmap to Governance: From Audit to Scale

Developing a governance strategy for generative AI begins with insight and ends with institutionalized practice. Organizations should start by conducting a comprehensive audit of existing AI use. This means mapping out where generative models are deployed, who uses them, what data they access, and how their outputs are reviewed and stored. Without this visibility, it is impossible to govern responsibly.
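The audit described above can start as a structured inventory. The following sketch assumes a hypothetical `AIDeployment` record with fields for the tool, the owning team, the data categories it touches, and how outputs are reviewed; the field names and review levels are illustrative choices. It then surfaces the deployments that handle sensitive data with no output review.

```python
from dataclasses import dataclass, field

# Assumed labels for sensitive data categories in this sketch.
SENSITIVE_CATEGORIES = {"PII", "PHI"}


@dataclass
class AIDeployment:
    tool: str
    owner_team: str
    data_categories: set = field(default_factory=set)
    output_review: str = "none"  # assumed levels: "none", "spot_check", "human_in_the_loop"


def unreviewed_sensitive(inventory):
    """Return deployments that touch sensitive data but have no output review."""
    return [
        d for d in inventory
        if d.data_categories & SENSITIVE_CATEGORIES and d.output_review == "none"
    ]


if __name__ == "__main__":
    inventory = [
        AIDeployment("note-summarizer", "clinical-ops", {"PHI"}, "human_in_the_loop"),
        AIDeployment("support-chatbot", "customer-care", {"PII"}, "none"),
        AIDeployment("ad-copy-generator", "marketing", set(), "none"),
    ]
    for gap in unreviewed_sensitive(inventory):
        print(gap.tool, gap.owner_team)
```

Even a spreadsheet-level inventory like this gives the governance committee a concrete list of gaps to prioritize before policies are drafted.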

Once this landscape is clear, the next step is policy development. Organizations must craft guidelines addressing acceptable use, ethical considerations, data handling, and risk tolerance. These policies should align with internal values and external standards, such as the NIST AI Risk Management Framework and ISO 42001.

Organizations should introduce governance tools to operationalize these policies, such as explainability software, content moderation filters, and model monitoring platforms. These technologies allow teams to enforce rules, monitor outputs, and provide audit-ready documentation.

Institutional support is also key. Building a governance committee or assigning clear roles to cross-functional stakeholders ensures continuity and authority. Legal, compliance, data science, product, and operations must all participate. This committee can evaluate high-risk use cases, approve exceptions, and lead periodic reviews.

Equally important is regulatory alignment. In regulated sectors, governance strategies must map explicitly to relevant laws: HIPAA in healthcare, GLBA in financial services, and the EU AI Act for operations within or adjacent to Europe. This not only reduces legal exposure but also demonstrates good-faith compliance to regulators.

Finally, implementation should be iterative. Pilot governance structures in a limited environment, perhaps a high-risk or high-impact workflow. Measure outcomes, gather feedback, and refine processes, and scale governance enterprise-wide only after this test phase.

This deliberate, informed approach transforms governance from a reactive necessity into a proactive framework for ethical, scalable innovation.

Getting Started with Generative AI Governance

For organizations new to governance, the priority is identifying where AI is being used and who is responsible for oversight. A governance playbook should define roles, responsibilities, and review protocols. Suggested first steps:

- Inventory current generative AI tools and their applications.

- Assess risks tied to data sensitivity, output use, and model behavior.

- Select a governance platform or partner to support policy deployment and monitoring.

- Launch pilot programs in high-value, high-risk areas (e.g., clinical documentation, marketing content).

Getting Started with the Pacific AI Policy Suite

The initial step for organizations embarking on responsible AI governance is establishing clarity: understanding the applicable laws, identifying where generative AI is used, and determining oversight responsibilities. The Pacific AI Policy Suite is a foundational resource, offering a unified framework that simplifies compliance with more than 80 AI-related laws, regulations, and standards.

1. Adopt the AI Policy Suite

Begin by integrating the Pacific AI Policy Suite into your organization’s governance framework. Designed to simplify complexity, the suite turns legal and regulatory obligations into clear, enforceable policies. It captures requirements from more than 80 AI laws and standards, ranging from the Americans with Disabilities Act (ADA) and California SB 942 to global benchmarks like the EU AI Act, and aligns them with practical controls that can be deployed organization-wide. This removes the need to track each regulation manually and ensures your policies evolve with the law. By leveraging this structured, regularly updated framework, organizations can stay legally compliant, meet the expectations of auditors and regulators, and build AI systems that reflect ethical and responsible practices by design.

2. Map Your AI Landscape

Conduct a comprehensive audit to identify:

- All generative AI tools in use.

- Departments and personnel that use these tools.

- Data inputs and outputs associated with AI applications.

This mapping is crucial for assessing exposure and designing appropriate controls.

3. Translate Regulation into Practice

Utilize the Policy Suite to convert abstract legal mandates into practical workflows:

- Implement policies that address data privacy, transparency, and accountability.

- Establish procedures for human oversight and review of AI outputs.

- Develop audit trails to document AI decision-making processes.

This approach ensures that compliance is not merely theoretical but embedded in daily operations.

4. Pilot in High-Risk Areas

Initiate pilot programs in departments where AI poses significant risks, such as:

- Clinical documentation in healthcare.

- Financial decision-making processes.

- Public communications in government agencies.

These pilots allow for testing governance structures and refining policies before broader implementation.

5. Signal Accountability

Adopting the AI Policy Suite signals to stakeholders, including regulators, customers, and partners, that the organization is proactive in managing AI risks. It demonstrates a commitment to transparency, ethical practices, and compliance with evolving legal landscapes.

By following these steps, organizations can establish a robust governance framework that mitigates risks and positions them as leaders in responsible AI deployment.

Why Every Business Needs a Generative AI Governance Strategy

The accelerating integration of generative AI into business operations has brought both unprecedented capabilities and equally significant risks. From automating content generation to enabling decision support systems, these technologies are quickly becoming central to competitive advantage. However, without transparent governance, organizations expose themselves to various vulnerabilities—regulatory breaches, data privacy violations, biased outputs, and erosion of stakeholder trust.

Responsible AI governance isn’t just a protective mechanism; it’s a strategic asset. Businesses that embed robust oversight into their AI programs can move faster and more confidently. They gain the ability to innovate within defined ethical and legal boundaries, to meet compliance requirements proactively rather than reactively, and to reassure clients, partners, and regulators that AI is being deployed thoughtfully and transparently.

Effective governance is holistic. It spans continuous model monitoring, establishing clear ethical policies, structured risk assessments, cross-functional oversight, and well-maintained audit trails. It transforms generative AI from a potential liability into a controlled, accountable, and high-performing component of enterprise strategy.

Industries such as healthcare, finance, and government already show how governance enables safe and scalable adoption. These sectors face high stakes and tight regulations, yet they use governance frameworks not to slow progress, but to build systems they can trust—and prove trustworthy to others.

Ultimately, every business benefits from a governance strategy that aligns AI use with its values, responsibilities, and goals. Governance clarifies who is accountable, what risks are acceptable, and how to operationalize trust.

To learn more, watch our webinar on unifying 70+ AI laws and standards into a single governance suite. For practical tools, download the Pacific AI Policy Suite to start building your governance program today.

FAQ

What unique challenges does generative AI present compared to traditional AI systems?

Generative AI can produce deepfakes, disinformation, and hallucinations, posing increased risks in areas like privacy, safety, and accuracy—necessitating stronger controls than traditional AI.

What are the core components of a generative AI governance framework?

A robust framework includes transparency/explainability, fairness, privacy/data protection, accountability/oversight, and safety/security measures.

Why is adaptive governance essential for generative AI?

Due to generative AI’s rapid evolution and expanding capabilities, governance must be flexible—continuously updating risk assessments, policies, and monitoring practices to keep pace.

How can organizations implement generative AI governance effectively?

Start by mapping AI systems, forming cross-functional governance councils, defining clear policies, piloting in high-risk areas, and establishing accountability mechanisms.

Which industries have adopted specialized generative AI governance practices?

Industries like healthcare (patient data protection, clinical decision support), telecommunications (customer bots, network optimization), and government (policy analysis, service delivery) are using domain-specific guardrails.